Overview of some often used Oracle 10g/11g/12c SQL Statements

Version : 3.6

Date : 30 November 2016

By : Albert van der Sel

Remark : Please refresh the page to see any updates.

It may take a few seconds to load this html page...

This note is organized in 2 parts:

Part 1: 10g/11g Statements (practically all will work in 12c as well)

Part 2: Specific 12c Statements

Part 1 was created while 10g and 11g were still courant versions of the RDBMS.

However, since 12c is availble, the note had to be extended with additional 12c specifics. Hence Part 2.

Part 1:

Main Contents Part 1: 10g/11g

1. ENABLE AND DISABLE A CONSTRAINT

2. DISABLE AND ENABLE TRIGGER

3. PROCESSES AND LOCKS

4. QUICK CHECK DATABASE NAME AND INSTANCE NAME

5. QUICK CHECK ON DATABASE FILES

6. QUICK CHECK ON BACKUP/RECOVERY RELATED EVENTS

7. EXAMPLES OF SOME COMMON CREATE STATEMENTS

8. GET THE SQL ISSUED AGAINST THE DATABASE

9. GET THE SGA PROPERTIES

10. CREATE AN "SPFILE.ORA" FROM AN "INIT.ORA" AND THE OTHER WAY AROUND

11. CREATE A COPY TABLE WITH ALL DATA

12. A FEW SIMPLE WAYS TO TRACE A SESSION

13. A FEW SIMPLE WAYS TO DETECT WAITS

14. CREATE A DATABASE USER

15. FINDING INVALID OBJECTS AND REPAIR

16. CREATING AND REBUILDING INDEXES

17. GETTING PRODUCT/PARAMETER INFORMATION

18. KILLING AN ORACLE SESSION

19. 9i,10g,11g INIT.ORA/SPFILE.ORA initialization parameters

20. DIAGNOSTIC TOOLS IN 9i: A FEW WORDS ON STATSPACK

21. USING A CURSOR IN PL/SQL LOOPS

22. EXECUTING SCRIPTS FROM THE "SQL>" PROMPT

23. USING CONTROLS, AND "FOR.." AND "WHILE.." LOOPS IN PL/SQL

24. HOW TO PUT SQLPLUS OUTPUT IN A SHELL VARIABLE

25. INSTANCE STARTUP OPTIONS

26. A FEW 10g,11g RMAN NOTES

27. HOW TO VIEW IF THE DATABASE IS DOING A LARGE ROLLBACK

28. A SIMPLE WAY TO CLONE A 9i/10g/11g DATABASE

29. A FEW NOTES ON 10g/11g ADDM and AWR

30. A FEW connect STRING EXAMPLES

31. UNSTRUCTURED PLSQL txt FILE

32. HOW TO SOLVE BLOCK CORRUPTION

33. BIRDS-EYE VIEW ON INSTANCE STRUCTURE AND PROCESSES.

34. Appendices.

Part 2:

Main Contents Part 2: 12c (and some also applies to 10g/11g)

35. EM CLOUD CONTROL 12c or EM EXPRESS 12c.

36. CDB AND PDB's IN 12c.

37. CLONING A DATABASE IN 12c.

38. FULL DATABASE CACHING IN 12c.

39. CREATE DATABASE USER IN 12c.

40. ASM IN 10g/11g AND 12c.

41. RMAN IN 12c (SEE ALSO SECTION 26 FOR 10g/11g).

42. ADR, LOGFILES, DIAGNOSTICS (11g/12c).

43. MOVING DATABASE FILES.

44. DATAGUARD 11g/12c.

45. SHOW OFTEN ACCESSED TABLES AND INDEXES (10g/11g/12c).

46. SHOW PERMISSIONS AND GRANT STATEMENTS (10g/11g/12c).

47. EXPDP AND IMPDP (10g/11g/12c).

1. ENABLE AND DISABLE A CONSTRAINT:

-- Disable and enable one Constraint:

ALTER TABLE table_name enable CONSTRAINT constraint_name;

ALTER TABLE table_name disable CONSTRAINT constraint_name;

-- Make a list of statements: Disable and enable ALL Foreign Key (type=R) constraints in one schema (like e.g. HARRY):

SELECT 'ALTER TABLE HARRY.'||table_name||' enable constraint '||constraint_name||';'

FROM DBA_CONSTRAINTS

WHERE owner='HARRY' AND constraint_type='R';

SELECT 'ALTER TABLE HARRY.'||table_name||' disable constraint '||constraint_name||';'

FROM DBA_CONSTRAINTS

WHERE owner='HARRY' AND constraint_type='R';

More on "dynamic" statements:

The statements you see above, generate listings of actual statements.

This will help you if you must alter a large list of objects, or if you must grant or revoke permissions etc.. etc..

Here are a few other examples:

-- create synonyms:

select 'create or replace public synonym '||table_name||'for HR.'||table_name||';'

from dba_tables where owner='HR';

-- INDEX rebuild:

SELECT 'alter index ALBERT.'||INDEX_NAME||' rebuild;'

from dba_indexes where owner='ALBERT';

-- Granting permissions to a role or user for a set of objects:

SELECT 'GRANT SELECT ON HR.'||table_name||' TO ROLE_READONLY;' from dba_tables

where owner='HR';

SELECT 'GRANT SELECT ON HR.'||view_name||' TO ROLE_READONLY;' from dba_views

where owner='HR';

SELECT 'GRANT SELECT, INSERT, UPDATE, DELETE ON NiceApp.'||table_name||' TO Albert;' from dba_tables

where owner='NiceApp';

2. DISABLE AND ENABLE TRIGGER:

-- Disable and enable one trigger:

ALTER TRIGGER trigger_name DISABLE;

ALTER TRIGGER trigger_name ENABLE;

-- Or in 1 time for all triggers on a table:

ALTER TABLE table_name DISABLE ALL TRIGGERS;

ALTER TABLE table_name ENABLE ALL TRIGGERS;

-- Drop a trigger:

DROP TRIGGER trigger_name;

3. PROCESSES AND LOCKS:

3.1 QUICK CHECK ON PROCESSES:

set linesize=1000

set pagesize=1000

-- v$session mainly shows characteristics of Oracle Sessions, v$process is more oriented

-- to OS processes.

-- The below two (similar) queries "connects" the Oracle Session ID (sid) to the OS process (spid):

SELECT

p.spid AS OS_PID,

p.pid,

s.sid AS ORACLE_SID,

p.addr,s.paddr,

substr(s.username, 1, 15) AS DBUSER,

substr(s.schemaname, 1, 15),

s.command,

substr(s.osuser, 1, 15) AS OSUSER,

substr(s.machine, 1, 15) AS MACHINE,

substr(s.program,1,15) AS PROGRAM

FROM v$session s, v$process p

WHERE s.paddr=p.addr

SELECT

p.spid AS OS_PID,

s.sid AS ORACLE_SID,

substr(s.osuser, 1, 15) AS OSUSER,

substr(s.program,1,55) AS PROGRAM,

substr(s.module,1,55) AS MODULE

FROM v$session s, v$process p

WHERE s.paddr=p.addr;

-- Short version:

select p.spid, s.sid, s.osuser, s.program from

v$process p, v$session s where p.addr=s.paddr;

-- Listing characteristics of Oracle Sessions (v$session):

SELECT

sid, serial#, substr(username,1,15), substr(osuser,1,15), LOCKWAIT, substr(program,1,30), substr(module,1,30)

FROM v$session;

SELECT

sid, serial#, command,substr(username, 1, 15), substr(osuser,1,15), sql_address,LOCKWAIT,

to_char(logon_time, 'DD-MM-YYYY;HH24:MI'), substr(program, 1, 30)

FROM v$session;

-- background processes:

SQL> SELECT paddr, name, substr(description,1,40) FROM v$bgprocess;

SQL> SELECT pid, spid, program, background FROM v$process WHERE BACKGROUND=1;

3.2 QUICK CHECK ON LOCKS:

SELECT d.OBJECT_ID, substr(OBJECT_NAME,1,20), l.SESSION_ID, l.ORACLE_USERNAME, l.LOCKED_MODE

FROM v$locked_object l, dba_objects d

WHERE d.OBJECT_ID=l.OBJECT_ID;

SELECT * FROM DBA_WAITERS;

SELECT waiting_session, holding_session, lock_type, mode_held

FROM dba_waiters;

3.3 QUICK CHECK ON TEMP:

select total_extents, used_extents, total_extents, current_users, tablespace_name

from v$sort_segment;

select username, user, sqladdr, extents, tablespace from v$sort_usage;

3.4 QUICK CHECK ON ACTIVITY UNDO:

SELECT a.sid, a.saddr, b.ses_addr, a.username, b.xidusn, b.used_urec, b.used_ublk

FROM v$session a, v$transaction b

WHERE a.saddr = b.ses_addr;

3.5 QUICK CHECK ON CPU USAGE

select ss.username, se.SID, se.SERIAL#, VALUE/100 cpu_usage_seconds

from v$session ss, v$sesstat se, v$statname sn

where

se.STATISTIC# = sn.STATISTIC#

and

NAME like '%CPU used by this session%'

and

se.SID = ss.SID

and

ss.status='ACTIVE'

and

ss.username is not null

order by VALUE desc;

select v.sql_text, v.FIRST_LOAD_TIME, v.PARSING_SCHEMA_ID, v.DISK_READS, v.ROWS_PROCESSED, v.CPU_TIME,

b.username from

v$sqlarea v, dba_users b

where v.FIRST_LOAD_TIME > '2017-06-26'

and v.PARSING_SCHEMA_ID=b.user_id

order by v.CPU_TIME desc ;

4. QUICK CHECK DATABASE NAME AND INSTANCE NAME:

set linesize=1000

set pagesize=1000

SELECT * FROM v$database;

SELECT DBID, NAME, CREATED, LOG_MODE, OPEN_MODE FROM v$database;

SELECT * FROM v$instance;

SELECT INSTANCE_NAME, HOST_NAME,VERSION, STARTUP_TIME, STATUS FROM v$instance;

5. QUICK CHECKS ON DATABASE FILES:

5.1 CONTROLFILES:

select * from v$controlfile;

5.2 REDO LOG FILES:

select * from v$log;

select * from v$logfile;

5.3 DATA FILES:

SELECT file_id, substr(file_name, 1, 70), substr(tablespace_name,1,50), status FROM dba_data_files;

SELECT file_id, substr(file_name, 1, 70), bytes, blocks, autoextensible FROM dba_data_files;

SELECT file#, status, substr(name, 1, 70) FROM V$DATAFILE;

5.4 FREE/USED SPACE IN TABLESPACES:

SELECT Total.name "Tablespace Name",

Free_space, (total_space-Free_space) Used_space, total_space

FROM

(SELECT tablespace_name, sum(bytes/1024/1024) Free_Space

FROM sys.dba_free_space

GROUP BY tablespace_name

) Free,

(SELECT b.name, sum(bytes/1024/1024) TOTAL_SPACE

FROM sys.v_$datafile a, sys.v_$tablespace B

WHERE a.ts# = b.ts#

GROUP BY b.name

) Total

WHERE Free.Tablespace_name = Total.name;

6. QUICK CHECK ON BACKUP/RECOVERY RELATED EVENTS:

SELECT * FROM v$backup;

SELECT file#, status, substr(name, 1, 70), checkpoint_change# FROM v$datafile;

SELECT file#, status, checkpoint_change# FROM v$datafile_header;

SELECT substr(name,1,60), recover, fuzzy, checkpoint_change#, resetlogs_change#, resetlogs_time

FROM v$datafile_header;

SELECT name, open_mode, checkpoint_change#, ARCHIVE_CHANGE# FROM v$database;

SELECT GROUP#,THREAD#,SEQUENCE#,MEMBERS,ARCHIVED,STATUS,FIRST_CHANGE# FROM v$log;

SELECT GROUP#,substr(member,1,70) FROM v$logfile;

SELECT * FROM v$log_history;

SELECT * FROM v$recover_file;

SELECT * FROM v$recovery_log;

SELECT first_change#, next_change#, sequence#, archived, substr(name, 1, 50)

FROM V$ARCHIVED_LOG;

SELECT status,resetlogs_change#,resetlogs_time,checkpoint_change#,

to_char(checkpoint_time, 'DD-MON-YYYY HH24:MI:SS') as checkpoint_time,count(*)

FROM v$datafile_header

group by status, resetlogs_change#, resetlogs_time, checkpoint_change#, checkpoint_time

order by status, checkpoint_change#, checkpoint_time ;

SELECT LF.member, L.group#, L.thread#, L.sequence#, L.status,

L.first_change#, L.first_time, DF.min_checkpoint_change#

FROM v$log L, v$logfile LF,

(select min(checkpoint_change#) min_checkpoint_change#

from v$datafile_header

where status='ONLINE') DF

WHERE LF.group# = L.group#

AND L.first_change# >= DF.min_checkpoint_change#;

SELECT * FROM V$RECOVERY_FILE_DEST;

SELECT * FROM V$FLASH_RECOVERY_AREA_USAGE;

SELECT V1.GROUP#, MEMBER, SEQUENCE#, FIRST_CHANGE#

FROM V$LOG V1, V$LOGFILE V2

WHERE V1.GROUP# = V2.GROUP# ;

SELECT FILE#, CHANGE# FROM V$RECOVER_FILE;

select al.sequence#

from v$archived_log al, v$log rl

where al.sequence# = rl.sequence# (+)

and al.thread# = rl.thread# (+)

and ( rl.status = 'INACTIVE'

or rl.status is null

)

and al.deleted = 'NO'

order by al.sequence#

SELECT RECOVERY_ESTIMATED_IOS FROM V$INSTANCE_RECOVERY;

7. EXAMPLES OF SOME COMMON CREATE STATEMENTS :

7.1 CREATE TABLESPACE:

CREATE TABLESPACE STAGING DATAFILE 'C:\ORADATA\TEST11G\STAGING.DBF' SIZE 5000M

EXTENT MANAGEMENT LOCAL AUTOALLOCATE

SEGMENT SPACE MANAGEMENT AUTO;

CREATE TABLESPACE CISTS_01 DATAFILE '/u07/oradata/spldevp/cists_01.dbf' SIZE 1200M

EXTENT MANAGEMENT LOCAL UNIFORM SIZE 128K;

7.2 CREATE TABLE (heap organized):

CREATE TABLE employees

(

employee_id NUMBER(6),

first_name VARCHAR2(20),

last_name VARCHAR2(25) NOT NULL,

email VARCHAR2(25) NOT NULL,

phone_number VARCHAR2(20),

hire_date DATE CONSTRAINT emp_hire_date_nn NOT NULL,

job_id VARCHAR2(10) CONSTRAINT emp_job_nn NOT NULL,

salary NUMBER(8,2),

commission_pct NUMBER(2,2),

manager_id NUMBER(6),

department_id NUMBER(4),

CONSTRAINT emp_salary_min CHECK (salary > 0),

CONSTRAINT emp_email_uk UNIQUE (email)

) TABLESPACE USERS;

ALTER TABLE employees

ADD (

CONSTRAINT emp_emp_id_pk PRIMARY KEY (employee_id),

CONSTRAINT emp_dept_fk FOREIGN KEY (department_id) REFERENCES departments (department_id),

CONSTRAINT emp_job_fk FOREIGN KEY (job_id) REFERENCES jobs (job_id),

CONSTRAINT emp_manager_fk FOREIGN KEY (manager_id) REFERENCES employees (manager_id)

) ;

CREATE TABLE hr.admin_emp (

empno NUMBER(5) PRIMARY KEY,

ename VARCHAR2(15) NOT NULL,

ssn NUMBER(9) ENCRYPT,

job VARCHAR2(10),

mgr NUMBER(5),

hiredate DATE DEFAULT (sysdate),

photo BLOB,

sal NUMBER(7,2),

hrly_rate NUMBER(7,2) GENERATED ALWAYS AS (sal/2080),

comm NUMBER(7,2),

deptno NUMBER(3) NOT NULL,

CONSTRAINT admin_dept_fkey REFERENCES hr.departments

(department_id))

TABLESPACE admin_tbs

STORAGE ( INITIAL 50K);

7.3 OBJECT TABLE:

CREATE TYPE department_typ AS OBJECT

( d_name VARCHAR2(100),

d_address VARCHAR2(200) );

CREATE TABLE departments_obj_t OF department_typ;

INSERT INTO departments_obj_t

VALUES ('hr', '10 Main St, Sometown, CA');

7.4 GLOBAL TEMPORARY TABLE:

CREATE GLOBAL TEMPORARY TABLE my_temp_table (

column1 NUMBER,

column2 NUMBER

) ON COMMIT DELETE ROWS;

CREATE GLOBAL TEMPORARY TABLE my_temp_table (

column1 NUMBER,

column2 NUMBER

) ON COMMIT PRESERVE ROWS;

7.5 EXTERNAL TABLE:

CREATE OR REPLACE DIRECTORY ext AS 'c:\external';

GRANT READ ON DIRECTORY ext TO public;

CREATE TABLE ext_tab (

empno CHAR(4),

ename CHAR(20),

job CHAR(20),

deptno CHAR(2))

ORGANIZATION EXTERNAL (

TYPE oracle_loader

DEFAULT DIRECTORY ext

ACCESS PARAMETERS (

RECORDS DELIMITED BY NEWLINE

BADFILE 'bad_%a_%p.bad'

LOGFILE 'log_%a_%p.log'

FIELDS TERMINATED BY ','

MISSING FIELD VALUES ARE NULL

REJECT ROWS WITH ALL NULL FIELDS

(empno, ename, job, deptno))

LOCATION ('demo1.dat')

)

7.6 CREATE CLUSTER:

Index Cluster:

CREATE CLUSTER employees_departments_cluster

(department_id NUMBER(4))

SIZE 512;

CREATE INDEX idx_emp_dept_cluster ON CLUSTER employees_departments_cluster;

-- Now, "add" tables to the cluster like for example:

CREATE TABLE employees ( ... )

CLUSTER employees_departments_cluster (department_id);

CREATE TABLE departments ( ... )

CLUSTER employees_departments_cluster (department_id);

7.7 INDEX-ORGANIZED TABLE:

-- Index Organized Tables are tables that, unlike heap tables, are organized like B*Tree indexes.

CREATE TABLE labor_hour (

WORK_DATE DATE,

EMPLOYEE_NO VARCHAR2(8),

CONSTRAINT pk_labor_hour

PRIMARY KEY (work_date, employee_no))

ORGANIZATION INDEX;

7.8 DATABASE LINK:

-- To run queries against remote tables in another database, you can create a "database link":

-- Example:

CREATE Public Database Link MYLINK

connect To scott Identified By tiger using sales;

Here, "sales" is the alias as listed in the tnsnames.ora, which corresponds to some local

or remote instance.

Also, scott is a schema in that remote database, which owns the tables and views we are

interrested in.

Now suppose that EMP (scott.EMP) is a table in the remote database, then we can query it using

a syntax like:

SELECT * from EMP@MYLINK;

So, the SELECT statement simply uses identifiers like "OBJECT_NAME@DATABASELINK_NAME"

in the FROM clause.

Note: you can also create a "synonym" for such remote tables, which make them

to "appear" to exist locally.

7.9 SEQUENCE:

CREATE SEQUENCE sequence name

INCREMENT BY increment number

START WITH start number

MAXVALUE maximum value

CYCLE ;

CREATE SEQUENCE SEQ_SOURCE

INCREMENT BY 1

START WITH 1

MAXVALUE 9999999

NOCYCLE;

create table SOURCE

(

id number(10) not null,

longrecord varchar2(128));

CREATE OR REPLACE TRIGGER tr_source

BEFORE INSERT ON SOURCE FOR EACH ROW

BEGIN

SELECT seq_source.NEXTVAL INTO :NEW.id FROM dual;

END;

/

7.10 Partitioned Table:

-- RANGE PARTITIONED:

CREATE TABLE sales

( invoice_no NUMBER,

sale_year INT NOT NULL,

sale_month INT NOT NULL,

sale_day INT NOT NULL )

PARTITION BY RANGE (sale_year, sale_month, sale_day)

( PARTITION sales_q1 VALUES LESS THAN (1999, 04, 01)

TABLESPACE tsa,

PARTITION sales_q2 VALUES LESS THAN (1999, 07, 01)

TABLESPACE tsb,

PARTITION sales_q3 VALUES LESS THAN (1999, 10, 01)

TABLESPACE tsc,

PARTITION sales_q4 VALUES LESS THAN (2000, 01, 01)

TABLESPACE tsd );

-- A row with SALE_YEAR=1999, SALE_MONTH=8, and SALE_DAY=1 has a partitioning key of (1999, 8, 1)

-- and would be stored in partition SALES_Q3 in Tablespace tsc.

-- HASH PARTITIONED:

CREATE TABLE scubagear

(id NUMBER,

name VARCHAR2 (60))

PARTITION BY HASH (id)

PARTITIONS 4

STORE IN (gear1, gear2, gear3, gear4);

-- LIST PARTITIONED:

CREATE TABLE q1_sales_by_region

(deptno number,

deptname varchar2(20),

quarterly_sales number(10, 2),

state varchar2(2))

PARTITION BY LIST (state)

(PARTITION q1_northwest VALUES ('OR', 'WA'),

PARTITION q1_southwest VALUES ('AZ', 'UT', 'NM'),

PARTITION q1_northeast VALUES ('NY', 'VM', 'NJ'),

PARTITION q1_southeast VALUES ('FL', 'GA'),

PARTITION q1_northcentral VALUES ('SD', 'WI'),

PARTITION q1_southcentral VALUES ('OK', 'TX'));

-- Composite Range-Hash Partitioning:

CREATE TABLE scubagear (equipno NUMBER, equipname VARCHAR(32), price NUMBER)

PARTITION BY RANGE (equipno) SUBPARTITION BY HASH(equipname)

SUBPARTITIONS 8 STORE IN (ts1, ts2, ts3, ts4) -- tablespaces

(PARTITION p1 VALUES LESS THAN (1000),

PARTITION p2 VALUES LESS THAN (2000),

PARTITION p3 VALUES LESS THAN (MAXVALUE));

8. GET THE SQL ISSUED AGAINST THE DATABASE:

-- Could take a lot of performance, depending on uptime and activity.

-- Sort of auditing. You get the sql statements.

-- Important: First try this query on a test system.

-- set linesize 70

-- set pagesize 100

-- set trimspool on

-- spool /tmp/sql.log

select v.sql_text, v.FIRST_LOAD_TIME, v.PARSING_SCHEMA_ID, v.DISK_READS, v.ROWS_PROCESSED, v.CPU_TIME,

b.username from

v$sqlarea v, dba_users b

where v.FIRST_LOAD_TIME > '2010-03-15'

and v.PARSING_SCHEMA_ID=b.user_id

order by v.FIRST_LOAD_TIME ;

9. GET THE SGA PROPERTIES:

-- Oracle background processes:

SQL> SELECT paddr, name, substr(description,1,40) FROM v$bgprocess;

SQL> SELECT pid, spid, program, background FROM v$process WHERE BACKGROUND=1;

-- All processes:

SQL> SELECT SID,SERIAL#,USERNAME,COMMAND,PROCESS,MODULE,PROGRAM FROM v$session;

-- SGA properties:

SELECT * FROM v$sga;

SELECT * FROM v$sgastat;

SELECT * FROM v$pgastat; -- PGA properties

SELECT * FROM v$memory_target_advice ORDER BY memory_size;

SELECT SUBSTR(COMPONENT,1,20), CURRENT_SIZE, MIN_SIZE, MAX_SIZE, USER_SPECIFIED_SIZE from V$MEMORY_DYNAMIC_COMPONENTS;

SELECT sum(bytes) FROM v$sgastat WHERE pool in ('shared pool', 'java pool', 'large pool');

SELECT (1-(pr.value/(dbg.value+cg.value)))*100

FROM v$sysstat pr, v$sysstat dbg, v$sysstat cg

WHERE pr.name = 'physical reads'

AND dbg.name = 'db block gets'

AND cg.name = 'consistent gets';

SELECT * FROM v$sgastat

WHERE name = 'free memory';

SELECT gethits,gets,gethitratio FROM v$librarycache

WHERE namespace = 'SQL AREA';

SELECT substr(sql_text,1,40) "SQL",

count(*) ,

sum(executions) "TotExecs"

FROM v$sqlarea

WHERE executions < 5

GROUP BY substr(sql_text,1,40)

HAVING count(*) > 30

ORDER BY 2;

SELECT SUM(PINS) "EXECUTIONS", SUM(RELOADS) "CACHE MISSES WHILE EXECUTING"

FROM V$LIBRARYCACHE;

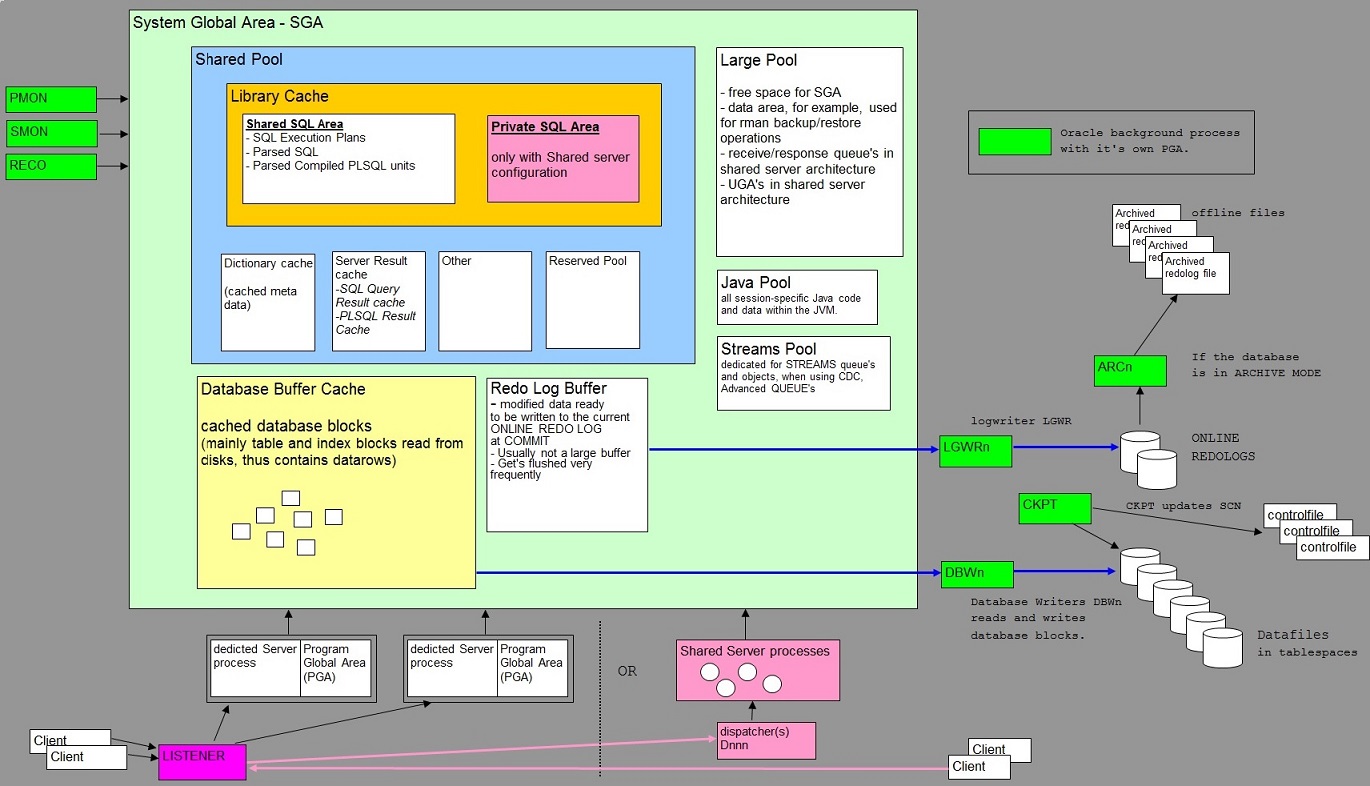

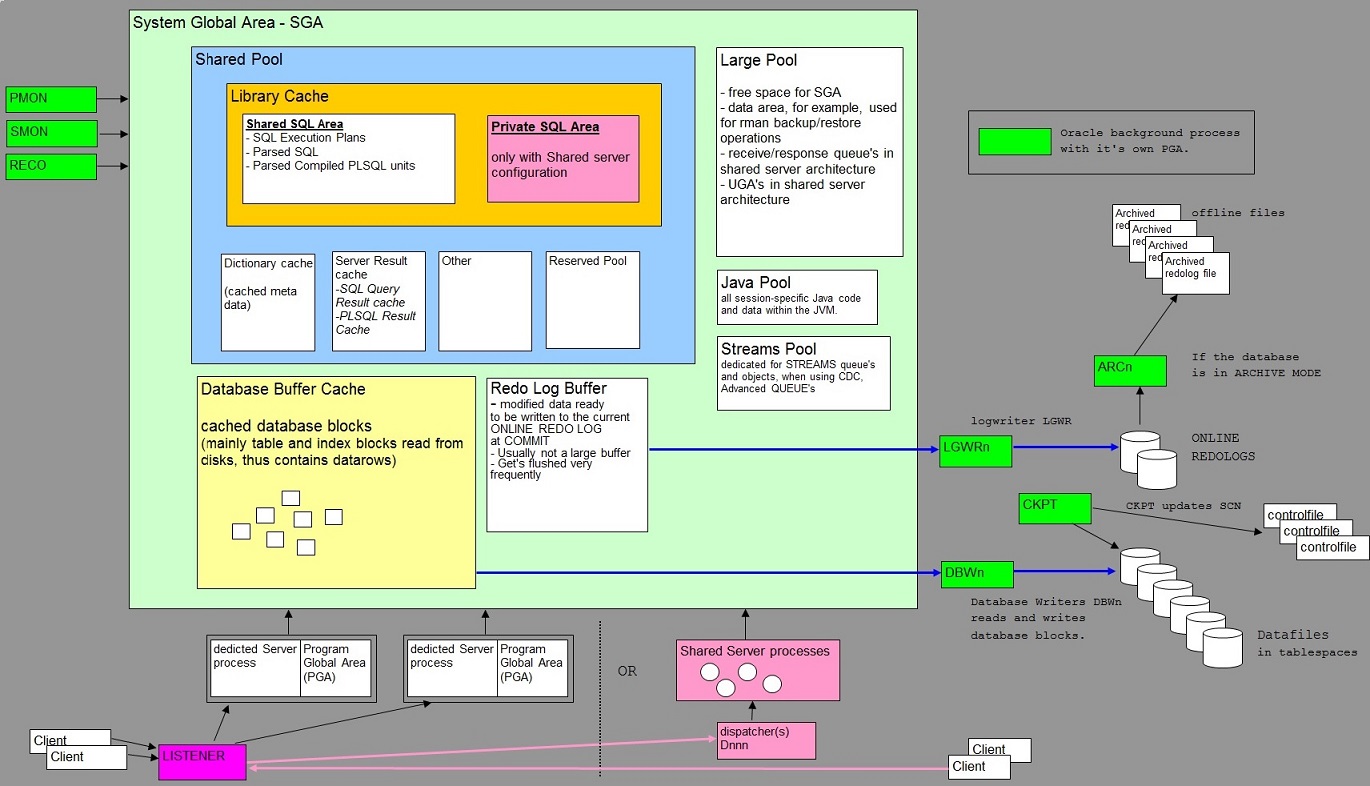

See also section 33 for an illustration of the memory structures and processes.

10. CREATE AN "SPFILE.ORA" FROM AN "INIT.ORA" AND THE OTHER WAY AROUND:

-- init.ora: traditional ascii format startup configuration file.

-- spfile.ora: binary format startup configuration file.

-- Both can be used to start the instance. However, preferred is to use the spfile.ora.

-- The actual (default) init.ora/spfile.ora file, will use the instance name in it's filename, like initSALES.ora

CREATE SPFILE='/opt/oracle/product/10.2/dbs/spfileSALES.ora'

FROM PFILE='/opt/oracle/product/10.2/admin/scripts/init.ora';

CREATE SPFILE='/opt/app/oracle/product/9.2/dbs/spfilePEGACC.ora'

FROM PFILE='/opt/app/oracle/admin/PEGACC/scripts/init.ora';

CREATE PFILE='/opt/oracle/product/10.2/admin/scripts/init.ora'

FROM SPFILE='/opt/oracle/product/10.2/dbs/spfileSALES.ora';

In addition, in Oracle 11g, you can create a pfile (init.ora) file, just from the current settings from memory:

CREATE PFILE='/apps/oracle/product/11.1/dbs/init_prod.ora' FROM MEMORY;

11. CREATE A COPY TABLE WITH ALL DATA:

-- The CTAS method or "CREATE TABLE AS SELECT" method, allows you to create an exact copy table

-- from an original table, with the same columns and datatypes, and all rows,

-- but excluding the indexes and constraints.

-- This new table will be created "on the fly", so it should not exist beforehand.

-- Example CTAS method:

CREATE TABLE EMPLOYEE2

AS SELECT * FROM EMPLOYEE;

-- One alternative method is:

-- Obtain the create script of the original table (e.g. using toad).

-- Create the new empty table using an other tablename, using that script.

-- Then use:

INSERT INTO NEW_TABLE

SELECT * FROM SOURCE_TABLE;

12. A FEW SIMPLE WAYS TO TRACE A SESSION:

-- This section ONLY lists the very very basics on tracing.

-- "Old fashion" traces in 9i/10g/11g, uses the Session Identifier (SID) and optionally other parameters like

-- the Serial number (serial#), to distinguish between all sessions in the database, and accordingly trace

-- that specific session.

-- Modern methods allows you to establish a client identifier, or let's you monitor on a module,

-- so you are not perse "tied" to the SID anymore.

-- From v$session you can find the username, osuser, SID, SERIAL#, program, and module (if needed).

-- Like for example (see section 3 for more info):

--

-- select sid, serial#, username from v$session;

-- select sid, serial#, username, module from v$session;

--

-- This will identify the Oracle SID with the username (and optionally the OS user and other).

-- If you know a characteristic program, or module (like sqlplus), or user, the SID (Session ID) can be found.

-- Especially the "module" field in v$session identifies a certain client program like "sqlplus.exe" or "nav.exe" etc..

-- But maybe, already a unique username is sufficient to identify the SID and SERIAL# in your situation.

-- In most methods, the 9i, 10g traces will be stored in the USER_DUMP_DEST directory (udump).

12.1 TRACING ON SESSION ID (SID)

1. Using the DBMS_MONITOR.SESSION_TRACE_ENABLE() procedure:

Example: Suppose you want to trace Session 75 with serial# 4421:

exec DBMS_MONITOR.SESSION_TRACE_ENABLE(75,4421);

To disable tracing specified in the previous step:

exec DBMS_MONITOR.SESSION_TRACE_DISABLE(75,4421);

Since almost always the session is qualified "enough" by the SID alone, you can use this as well:

exec DBMS_MONITOR.SESSION_TRACE_ENABLE(75);

Tracing your session can be done using:

exec DBMS_MONITOR.SESSION_TRACE_ENABLE();

or

exec DBMS_MONITOR.SESSION_TRACE_ENABLE(NULL, NULL);

2. Using the DBMS_SYSTEM.SET_EV() procedure:

Here, You need to know which "event" number you want to trace on, and the level thereoff.

For a performance related trace, event 10046 with level 8 (or 12) might be a good choice.

Be aware that these traces produce quick growing trace files (especially level 12).

Next, you need the SID and SERIAL# of the session you want to trace.

The trace information will be written to user_dump_dest.

Example:

Start the trace on Session 177 (with serial# 55235):

exec sys.dbms_system.set_ev(177,55235,10046,8,'');

Stop the trace on Session 177 (with serial# 55235):

exec sys.dbms_system.set_ev(177,55235,10046,0,'');

3. Using the DBMS_System.Set_Sql_Trace_In_Session() procedure:

A "quite old" and well known other procedure that can be used to trace a Session, is the

DBMS_System.Set_Sql_Trace_In_Session(SID, SERIAL#,true|false) procedure.

Example:

Turn SQL tracing on in session 448. The trace information will get written to user_dump_dest.

exec dbms_system.set_sql_trace_in_session(448,2288,TRUE);

Turn SQL tracing off in session 448

exec dbms_system.set_sql_trace_in_session(448,2288,FALSE);

12.2 TRACING ON OTHER IDENTIFIERS:

Instead of tracing on a known SID, to be able to trace on other "identifiers" is a much wanted feature.

As of 10g, the tracing facility has been greatly expanded.

New v$ "views" were added, and existing v$ "views" has been expanded to facilitate the new tracing methods.

This sub section will hold for 10g/11g.

Again let's take a look at DBMS_MONITOR again.

Suppose we want to track a program that connects to a RAC cluster. Now, the discussion is not much different

in using a standallone instance. Only, you probably know that the v$ views are specific for an instance,

while the gv$ views are "global" for all the instances in RAC.

The DBMS_MONITOR.serv_mod_act_trace_enable() method allows you to set the tracing on for sessions matching a

module, action, or other usable field in gv$session (or v$session).

So, suppose we want to generate traces for all SQL*plus sessions that connect to the cluster (RACDEV1) from any instance,

we could issue the following command:

BEGIN

DBMS_MONITOR.serv_mod_act_trace_enable

(service_name => 'RACDEV1',

module_name => 'SQL*Plus',

action_name => DBMS_MONITOR.all_actions,

waits => TRUE,

binds => FALSE,

instance_name => NULL

);

END;

/

13. A FEW SIMPLE WAYS TO DETECT WAITS:

-- This section ONLY lists the very very basics on waits.

-- set linesize 1000

-- set pagesize 1000

-- see for yourself where to use substr(field,start,lenght)

13.1 HOW TO IDENTIFY THE FILES WITH HIGHEST ACTIVITY:

-- query on v$filestat, dba_data_files:

SELECT v.PHYRDS, v.PHYWRTS, d.TABLESPACE_NAME, d.FILE_NAME

FROM V$FILESTAT v, DBA_DATA_FILES d

WHERE v.FILE#=d.FILE_ID;

13.2 HOW TO IDENTIFY ACTIVITY ON CONTROLFILES:

SELECT * FROM v$system_event

WHERE event LIKE '%control%' ;

13.3 HOW TO IDENTIFY WAITS OF SESSIONS OR WITH HIGH IO:

SELECT s.SID,v.username,v.osuser,v.command,s.BLOCK_GETS,s.PHYSICAL_READS,s.BLOCK_CHANGES,substr(v.module,1,30)

FROM v$sess_io s, v$session v

where v.sid=s.sid;

SELECT SID,OPNAME,SOFAR,TOTALWORK,START_TIME,LAST_UPDATE_TIME,TIME_REMAINING,MESSAGE

FROM v$session_longops;

-- identify SID's and the objects (or file#, block#) which are involved in waits.

SELECT SID, event,p1text,p1,p2text,p2

FROM v$session_wait

WHERE event LIKE 'db file%'

AND state = 'WAITING';

-- The p1 and p2 might identify the file# and block# of the object(s).

-- Determine the object as follows: suppose you found p1=5 and p2=1178

select segment_name, segment_type

from dba_extents

where file_id = 5 and 1178 between (block_id and block_id + blocks – 1);

13.4 OVERALL WAITS:

select

event,

total_waits,

time_waited / 100,

total_timeouts,

average_wait/100

from

v$system_event

where -- list of not too interresting events

event not in (

'dispatcher timer',

'lock element cleanup',

'Null event',

'parallel query dequeue wait',

'parallel query idle wait - Slaves',

'pipe get',

'PL/SQL lock timer',

'pmon timer',

'rdbms ipc message',

'slave wait',

'smon timer',

'SQL*Net break/reset to client',

'SQL*Net message from client',

'SQL*Net message to client',

'SQL*Net more data to client',

'virtual circuit status',

'WMON goes to sleep'

);

SELECT event, total_waits, total_timeouts, time_waited, average_wait

FROM v$system_event order by time_waited;

SELECT NAME, VALUE from v$sysstat

where name like '%db%' or name like '%block%'

or name like '%log%' or name like '%cons%'

or name like '%undo%' or name like '%write%' or name like '%read%';

13.5 MORE ON V$SESSION_LONGOPS:

Long running statements are also registered in v$session_longops.

Especially cute, are fields like "sofar"(work already done), "totalwork", and ofcourse

identifiers of the session and user.

Here are some well-known examples which almost everybody uses once in a while:

Example 1:

select * from

(

select

opname,

start_time,

target,

sofar,

totalwork,

units,

elapsed_seconds,

message

from

v$session_longops

order by start_time desc

)

where rownum <=1;

Example 2:

select

round(sofar/totalwork*100,2) percent_completed,

v$session_longops.*

from

v$session_longops

where

sofar <> totalwork

order by

target, sid;

Example 3:

Select 'long', to_char (l.sid), to_char (l.serial#), to_char(l.sofar), to_char(l.totalwork), to_char(l.start_time, 'DD-Mon-YYYY HH24:MI:SS' ),

to_char ( l.last_update_time , 'DD-Mon-YYYY HH24:MI:SS'), to_char(l.time_remaining), to_char(l.elapsed_seconds),

l.opname,l.target,l.target_desc,l.message,s.username,s.osuser,s.lockwait from v$session_longops l, v$session s

where l.sid = s.sid and l.serial# = s.serial#;

Select 'long', to_char (l.sid), to_char (l.serial#), to_char(l.sofar), to_char(l.totalwork), to_char(l.start_time, 'DD-Mon-YYYY HH24:MI:SS' ),

to_char ( l.last_update_time , 'DD-Mon-YYYY HH24:MI:SS'), s.username,s.osuser,s.lockwait from v$session_longops l, v$session s

where l.sid = s.sid and l.serial# = s.serial#;

14. CREATE A DATABASE USER:

-- EXAMPLE STANDARD DATABASE USER:

CREATE USER albert identified by albert

DEFAULT TABLESPACE SALESDATA -- salesdata is a tablespace

TEMPORARY TABLESPACE TEMP

QUOTA 100M ON SALESDATA

QUOTA 20M ON USERS

;

-- GRANT standard roles:

GRANT connect TO albert;

GRANT resource TO albert;

-- GRANT specific privileges:

GRANT create trigger TO albert;

GRANT create sequence TO albert;

GRANT create procedure TO albert;

-- DROP the user:

DROP USER albert cascade;

-- EXAMPLE (external) USER:

CREATE USER global_user

IDENTIFIED GLOBALLY AS 'CN=jjones, OU=sales, O=antapex, C=NL'

DEFAULT TABLESPACE users

QUOTA 500M ON users;

-- EXAMPLE (Windows AD authenticed user) USER:

Suppose we have the account Harry01 from the XYZ domain. For access to Oracle, we can create

the following registration, which does not need to logon to Oracle.

CREATE USER "OPS$XYZ\Harry01"

IDENTIFIED EXTERNALLY

DEFAULT TABLESPACE SALES_DATA

TEMPORARY TABLESPACE TEMP

PROFILE DEFAULT

ACCOUNT UNLOCK

QUOTA 100M ON SALES_DATA

GRANT CONNECT TO "OPS$XYZ\Harry01";

GRANT READ_SALES TO "OPS$XYZ\Harry01";

GRANT RESOURCE TO "OPS$XYZ\Harry01";

GRANT SELECT_CATALOG_ROLE TO "OPS$XYZ\Harry01";

GRANT ADMINISTER DATABASE TRIGGER TO "OPS$XYZ\Harry01";

GRANT ALTER USER TO "OPS$XYZ\Harry01";

GRANT CREATE INDEXTYPE TO "OPS$XYZ\Harry01";

GRANT CREATE LIBRARY TO "OPS$XYZ\Harry01";

GRANT CREATE OPERATOR TO "OPS$XYZ\Harry01";

GRANT CREATE PROCEDURE TO "OPS$XYZ\Harry01";

GRANT CREATE PUBLIC SYNONYM TO "OPS$XYZ\Harry01";

GRANT CREATE SEQUENCE TO "OPS$XYZ\Harry01";

GRANT CREATE SESSION TO "OPS$XYZ\Harry01";

GRANT CREATE TABLE TO "OPS$XYZ\Harry01";

GRANT CREATE TRIGGER TO "OPS$XYZ\Harry01";

GRANT CREATE TYPE TO "OPS$XYZ\Harry01";

GRANT CREATE VIEW TO "OPS$XYZ\Harry01";

GRANT DROP PUBLIC SYNONYM TO "OPS$XYZ\Harry01";

GRANT UNLIMITED TABLESPACE TO "OPS$XYZ\Harry01";

15. FINDING INVALID OBJECTS AND REPAIR:

-- Finding invalid objects:

SELECT owner, substr(object_name, 1, 30), object_type, created,

last_ddl_time, status

FROM dba_objects

WHERE status='INVALID';

-- Recompile packages:

SELECT 'ALTER '||decode( object_type,

'PACKAGE SPECIFICATION'

,'PACKAGE'

,'PACKAGE BODY'

,'PACKAGE'

,object_type)

||' '||owner

||'.'|| object_name ||' COMPILE '

||decode( object_type,

'PACKAGE SPECIFICATION'

,'SPECIFACTION'

,'PACKAGE BODY'

,'BODY'

, NULL) ||';'

FROM dba_objects WHERE status = 'INVALID';

-- Using DBMS_UTILITY.compile_schema to compile all objects in a schema:

Example:

exec DBMS_UTILITY.compile_schema('HARRY');

-- Manually recompile objects like views, triggers etc..:

ALTER PACKAGE my_package COMPILE;

ALTER PACKAGE my_package COMPILE BODY;

ALTER PROCEDURE my_procedure COMPILE;

ALTER FUNCTION my_function COMPILE;

ALTER TRIGGER my_trigger COMPILE;

ALTER VIEW my_view COMPILE;

16. CREATING AND REBUILDING INDEXES:

16.1 EXAMPLES ON HOW TO CREATE INDEXES:

-- Examples of ordinary index:

CREATE INDEX indx_cust_id ON CUSTOMERS(cust_id);

CREATE INDEX indx_cust_id ON CUSTOMERS(cust_id) nologging;

CREATE INDEX indx_cust_id ON CUSTOMERS(cust_id) TABLESPACE SALES_INDEX01;

CREATE INDEX index_employees ON EMPLOYEES(last_name, job_id, salary); -- multiple columns

-- Some special types of indexes:

-- reverse key:

CREATE INDEX indx_r_name ON RESTAURANTS(r_name) REVERSE;

-- bitmap index:

CREATE BITMAP INDEX indx_gender ON EMPLOYEE (gender) TABLESPACE EMPDATA;

-- function based index:

CREATE INDEX emp_total_sal_idx ON employees (12 * salary * commission_pct, salary, commission_pct);

16.2 EXAMPLES ON HOW TO REBUILD INDEXES:

-- Note that rebuilding large, or many, indexes, will generate, or add, to redo logging as well.

-- Therefore, in some cases the NOLOGGING keyword maybe of help.

-- Also, in case of very large, or a very large number of big indexes, rebuilding will be a major task.

-- This note is not about the best practises on when to rebuild indexes.

Examples:

alter index HARRY.EMPNO_INDEX rebuild;

alter index HARRY.EMPNO_INDEX rebuild nologging;

alter index HARRY.EMPNO_INDEX rebuild tablespace SALES_INDEX_02; -- rebuild to another tablespace

-- Create a list of rebuild index statements:

SELECT 'ALTER INDEX HARRY.'||index_name||' REBUILD;' from dba_indexes

where owner='HARRY';

17. GETTING PRODUCT/PARAMETER INFORMATION:

17.1 OPTIONS, VERSION, FEATURES:

SELECT * FROM V$VERSION;

SELECT * FROM V$OPTION;

SELECT * FROM V$LICENSE;

SELECT * FROM PRODUCT_COMPONENT_VERSION;

17.2 COLLATION:

SELECT * FROM NLS_DATABASE_PARAMETERS;

SELECT * FROM NLS_SESSION_PARAMETERS;

SELECT * FROM NLS_INSTANCE_PARAMETERS;

17.3 PARAMETERS/OPTIONS:

SELECT * FROM DBA_REGISTRY;

SELECT * FROM v$parameter;

18. KILLING AN ORACLE SESSION:

18.1 Single instance:

From v$session, you can obtain the Oracle Session ID (sid) and serial#.

If a Oracle session must be "killed", you can use the following ALTER SYSTEM command:

ALTER SYSTEM KILL SESSION 'sid, serial#';

Example:

ALTER SYSTEM KILL SESSION '77,285';

The above statement does not use brutal force to end the session, if currectly transactions are

associated with that session. So, it might show up as having a status of "marked for kill".

18.2 Cluster:

In a RAC cluster environment, a third parameter parameter should be added, which is the instance ID:

ALTER SYSTEM KILL SESSION 'sid, serial#,@inst_id';

18.3 Additional clauses:

A few additional clauses can be used with the ALTER SYSTEM KILL SESSION statement, like for example:

ALTER SYSTEM KILL SESSION 'sid, serial#' IMMEDIATE;

The above command, will terminate the session and possible ongoing transactions will roll back.

18.4 Killing OS processes:

If you have identified the "process id" of the OS process which is associated to the Oracle session,

you might consider "killing" the process from the OS prompt.

Example on Windows:

C:\> orakill SALES 22389

Example on Unix/Linux:

% kill -9 55827

19. 9i,10g,11g INIT.ORA/SPFILE.ORA parameters:

19.1 ABOUT THE SPFILE/INIT.ORA FILES:

The "spfile.ora" (or "init.ora") instance startup configuration file, determines in a large way,

how the instance will be configured. Think of the size of SGA memory, how many processes are allowed,

location of controlfiles, location of archived redologs etc.. etc..

As of 9i, a (binary) spfile.ora is used. However, it's still possible to start a 9i/10g/11g instance

using a tradional (ascii) init.ora file.

If at a closed instance, you would do this:

SQL> connect / as sysdba

SQL> startup

Then the default spfile.ora would be used.

But, at a closed instance, if you would do this:

SQL> connect / as sysdba

SQL> startup mount pfile=/apps/oracle/product/10.2/admin/test10g/pfile/init.ora

SQL> alter database open;

Then that specific init.ora would be used to start and configure the instance.

Since the spfile.ora is not ascii, it's not easy to view the file directly

.

In section 10, we showed how to create an ascii init.ora file from an spfile.

One small advantage from an init.ora, is that it is easy to view it with any editor, or just with shell commands.

Here are a few examples again:

CREATE SPFILE='/opt/oracle/product/10.2/dbs/spfileSALES.ora'

FROM PFILE='/opt/oracle/product/10.2/admin/scripts/init.ora';

CREATE SPFILE='/opt/app/oracle/product/9.2/dbs/spfilePEGACC.ora'

FROM PFILE='/opt/app/oracle/admin/PEGACC/scripts/init.ora';

For viewing settings from the SQL> prompt, you can use the "show parameter" command, like so:

SQL> show parameter spfile

spfile string C:\ORACLE\PRODUCT\10.2\DB_1\DATABASE\SPFILETEST10G.ORA

So, that shows you the location of the spfile itself.

SQL> show parameter sga

NAME TYPE VALUE

lock_sga boolean FALSE

pre_page_sga boolean FALSE

sga_max_size big integer 280M

sga_target big integer 280M

So, the upper command shows you SGA (shared memory) related settings.

19.2 CLUSTER AND INSTANCE SPECIFIC PARAMETERS:

On a simple 10g test database, I created a init.ora file (see section 10), and a small partial section is shown below:

*.db_recovery_file_dest_size=2147483648

*.dispatchers='(PROTOCOL=TCP) (SERVICE=test10gXDB)'

*.job_queue_processes=10

*.open_cursors=300

*.pga_aggregate_target=96468992

*.processes=150

test10g.__db_cache_size=167772160

test10g.__java_pool_size=16777216

test10g.__large_pool_size=4194304

What's typical for 10g/11g, is that for the "*." settings, it means it's in effect for all instances in a cluster.

It;s there even if you just use a stand allone instance.

The records that are like "instance_name.setting", it means that it is in effect for that instance only.

So, If an initialization parameter applies to all instances, use *.parameter notation, otherwise

prefix the parameter with the name of the instance.

For example:

Assume that you start the instance "prod1" (in a cluster) with an SPFILE containing the following entries:

*.OPEN_CURSORS=500

prod1.OPEN_CURSORS=1000

Then OPEN_CURSORS=1000 is in effect only for the instance prod1.

19.3 EXAMPLE 10g/11g INIT.ORA, OR SPFILE.ORA:

-Example 1:

###########################################

# Cache and I/O

###########################################

db_block_size=8192

db_file_multiblock_read_count=16

###########################################

# Cursors and Library Cache

###########################################

open_cursors=300

###########################################

# Database Identification

###########################################

db_domain=antapex.org

db_name=test10g

###########################################

# Diagnostics and Statistics

###########################################

# Diagnostic locations (logfiles etc..) 10g parameters:

background_dump_dest=C:\oracle/admin/test10g/bdump

core_dump_dest=C:\oracle/admin/test10g/cdump

user_dump_dest=C:\oracle/admin/test10g/udump

# Diagnostic locations (logfiles etc..) 11g parameters:

DIAGNOSTIC_DEST=C:\oracle\

###########################################

# File Configuration

###########################################

control_files=("C:\oracle\oradata\test10g\control01.ctl", "C:\oracle\oradata\test10g\control02.ctl", "C:\oracle\oradata\test10g\control03.ctl")

db_recovery_file_dest=C:\oracle/flash_recovery_area

db_recovery_file_dest_size=2147483648

###########################################

# Job Queues

###########################################

job_queue_processes=10

###########################################

# Miscellaneous

###########################################

# 10g example:

# compatible=10.2.0.1.0

# 11g example:

compatible=11.1.0.0.0

###########################################

# Processes and Sessions

###########################################

processes=350

###########################################

# Memory

###########################################

# Example 10g setting:

sga_target=287309824

# Example 11g setting:

memory_target=287309824

###########################################

# Security and Auditing

###########################################

audit_file_dest=C:\oracle/admin/test10g/adump

remote_login_passwordfile=EXCLUSIVE

###########################################

# Shared Server

###########################################

dispatchers="(PROTOCOL=TCP) (SERVICE=test10gXDB)"

###########################################

# Sort, Hash Joins, Bitmap Indexes

###########################################

pga_aggregate_target=95420416

###########################################

# System Managed Undo and Rollback Segments

###########################################

undo_management=AUTO

undo_tablespace=UNDOTBS1

###########################################

# Archive Mode:

###########################################

LOG_ARCHIVE_DEST_1=c:\oracle\oradata\archlog

LOG_ARCHIVE_FORMAT='arch_%t_%s_%r.dbf'

- Example 2: Exported 11g spfile to an ascii init file

test11g.__db_cache_size=281018368

test11g.__java_pool_size=12582912

test11g.__large_pool_size=4194304

test11g.__oracle_base='c:\oracle' #ORACLE_BASE set from environment

test11g.__pga_aggregate_target=322961408

test11g.__sga_target=536870912

test11g.__shared_io_pool_size=0

test11g.__shared_pool_size=230686720

test11g.__streams_pool_size=0

*.audit_file_dest='c:\oracle\admin\test11g\adump'

*.audit_trail='db'

*.compatible='11.1.0.0.0'

*.control_files='c:\oradata\test11g\control01.ctl','c:\oradata\test11g\control02.ctl','c:\oradata\test11g\control03.ctl'

*.db_block_size=8192

*.db_domain='antapex.nl'

*.db_name='test11g'

*.db_recovery_file_dest='c:\oracle\flash_recovery_area'

*.db_recovery_file_dest_size=2147483648

*.diagnostic_dest='c:\oracle'

*.dispatchers='(PROTOCOL=TCP) (SERVICE=test11gXDB)'

*.memory_target=857735168

*.open_cursors=300

*.processes=350

*.remote_login_passwordfile='EXCLUSIVE'

*.undo_tablespace='UNDOTBS1'

19.4 IMPORTANT SPFILE/INIT PARAMETERS:

Let's review some of the most important init.ora parameters.

19.4.1. Parameters related to Oracle Managed Files OMF:

DB_CREATE_FILE_DEST = directory | ASM disk group

DB_CREATE_ONLINE_LOG_DEST_n = directory | ASM disk group

DB_CREATE_FILE_DEST specifies the default location for Oracle-managed datafiles.

This location is also used as the default location for Oracle-managed control files

and online redo logs if none of the DB_CREATE_ONLINE_LOG_DEST_n initialization parameters are specified.

DB_CREATE_ONLINE_LOG_DEST_n (where n = 1, 2, 3, ... 5) specifies the default location

for Oracle-managed control files and online redo logs.

If more than one DB_CREATE_ONLINE_LOG_DEST_n parameter is specified, then the control file and

online redo log is multiplexed across the locations of the other DB_CREATE_ONLINE_LOG_DEST_n parameters.

One member of each online redo log is created in each location, and one control file is created in each location.

Example:

DB_CREATE_FILE_DEST = '/u01/oracle/test10g'

DB_CREATE_ONLINE_LOG_DEST_1= '/u02/oracle/test10g'

DB_CREATE_ONLINE_LOG_DEST_2= '/u03/oracle/test10g'

19.4.2. Parameters related to the FLASH RECOVERY AREA (10g / 11gR1) or FAST RECOVERY AREA (11gR2)

In 11gR2, the "FLASH RECOVERY AREA" is renamed to "FAST RECOVERY AREA".

A flash recovery area is a location in which Oracle Database can store and manage files

related to backup and recovery. It is distinct from the database area.

Two parameters define the "FLASH RECOVERY AREA" or "FAST RECOVERY AREA":

You specify a flash recovery area with the following initialization parameters:

DB_RECOVERY_FILE_DEST (= location on filesystem or ASM)

DB_RECOVERY_FILE_DEST_SIZE (size reserved for DB_RECOVERY_FILE_DEST)

DB_RECOVERY_FILE_DEST specifies the default location for the flash recovery area. The flash recovery area contains

archived redo logs, flashback logs, and RMAN backups.

The DB_RECOVERY_FILE_DEST parameter makes sure that all flashback logs, RMAN backups, archived logs,

are under the control of the Instance.

Specifying this parameter without also specifying the DB_RECOVERY_FILE_DEST_SIZE initialization parameter is not allowed.

DB_RECOVERY_FILE_DEST_SIZE specifies (in bytes) the hard limit on the total space to be used

by target database recovery files created in the flash recovery area.

You cannot enable these parameters if you have set values for the LOG_ARCHIVE_DEST and

LOG_ARCHIVE_DUPLEX_DEST parameters. You must disable those parameters before setting up

the flash recovery area. You can instead set values for the

LOG_ARCHIVE_DEST_n parameters. If you do not set values for local LOG_ARCHIVE_DEST_n,

then setting up the flash recovery area will implicitly set LOG_ARCHIVE_DEST_10 to the flash recovery area.

Oracle recommends using a flash recovery area, because it can simplify backup and recovery operations for your database.

You may also set the DB_FLASHBACK_RETENTION_TARGET parameter.

This specifies in minutes how far back you can "flashback" the database, using the socalled "Flashback" framework.

How far back one can actually "flashback" the database, depends on how much flashback data

Oracle has kept in the recovery area.

Example:

db_recovery_file_dest='c:\oracle\flash_recovery_area'

db_recovery_file_dest_size=2147483648

19.4.3. Parameters related to Automatic Diagnostic Repository ADR:

This is for 11g only.

Oracle 9i and 10g uses the well known locations for the alert.log and trace files, which are specified by:

BACKGROUND_DUMP_DESTINATION, USER_DUMP_DESTINATION and CORE_DUMP_DESTINATION.

ADR is defined by the DIAGNOSTIC_DEST parameter, which specifies a location on the filesystem.

ADR is new in 11g, and is partly XML based. The logging (alert.log) and traces are part of ADR.

DIAGNOSTIC_DEST = { pathname | directory }

As of Oracle 11g Release 1, the diagnostics for each database instance are located in a dedicated directory,

which can be specified through the DIAGNOSTIC_DEST initialization parameter.

This location is known as the Automatic Diagnostic Repository (ADR) Home. For example, if the database name is proddb

and the instance name is proddb1, the ADR home directory would be "$DIAGNOSTIC_DEST/diag/rdbms/proddb/proddb1".

So, if the DIAGNOSTIC_DEST was placed to "C:\ORACLE", you would find the new style XML alert.log "log.xml" in, for example,

"C:\oracle\diag\rdbms\test11g\test11g\alert\log.xml" for the test11g instance.

The old plain text alert.log is still available in:

"C:\oracle\diag\rdbms\test11g\test11g\trace\alert_test11g.log"

19.4.4. Parameters related to DATABASE NAME AND DOMAIN:

The databasename, and the domain where it "resides", are defined by the parameters:

DB_NAME

DB_DOMAIN

The DB_NAME initialization parameter determines the local name component of the database name,

the DB_DOMAIN parameter, which is optional, indicates the domain (logical location) within a

network structure. The combination of the settings for these two parameters must

form a database name that is unique within a network.

For example, a database with a global database name of "test10g.antapex.org",

you would have the parameters like so:

DB_NAME = test10g

DB_DOMAIN = antapex.org

19.4.5. Parameters related to PROCESSES AND SESSIONS:

PROCESSES=max number of concurrent OS processes which can connect to the database.

SESSIONS=specifies the maximum number of sessions that can be created in the database.

Example:

PROCESSES=500

The PROCESSES initialization parameter determines the maximum number of

operating system processes that can be connected to Oracle Database concurrently.

The value of this parameter must be a minimum of one for each background process plus

one for each user process. The number of background processes will vary according

the database features that you are using. For example, if you are using Advanced

Queuing or the file mapping feature, you will have additional background processes.

If you are using Automatic Storage Management, then add three additional processes

for the database instance.

SESSIONS specifies the maximum number of sessions that can be created in the system.

Because every login requires a session, this parameter effectively determines the maximum number of

concurrent users in the system. You should always set this parameter explicitly to a value equivalent

to your estimate of the maximum number of concurrent users, plus the number of background processes,

plus approximately 10% for recursive sessions.

Note: it would not be a very good idea to specify the SESSION= parameter

which is lower than the PROCESSES parameter.

The default no of sessions: (1.1 * PROCESSES) + 5

19.4.6. Parameters related to MEMORY and SGA:

Memory = SGA memory (like buffer cache + all pools) + All server processes and background processes PGA's

- 11g: (Full) Automatic Memory Management = AMM -> by using parameter "MEMORY_TARGET="

- 10g/11g: Automatic Shared Memory Management = ASMM -> by using parameter"SGA_TARGET="

or

- 11g/10g/9i: Manual Memory Management, where you can specify all the individual buffers and poolsizes + pga's.

So, 11g AMM (is Total memory management) "is more" automatic than 11g/10g ASMM (auto SGA management) which is more automatic

than manual configuration of 11g/10g/9i cache, pools and pga's.

=> For 11g and 10g, it is possible to use the SGA_TARGET parameter.

"SGA_TARGET=amount_of_memory" is actually a single parameter for the total SGA size under Oracle control, where

automatically all SGA components (buffer cache and all pools) are sized as needed

.

When using SGA_TARGET=, then you do not need to specify all individual area's like:

DB_CACHE_SIZE (DEFAULT buffer pool)

SHARED_POOL_SIZE (Shared Pool)

LARGE_POOL_SIZE (Large Pool)

JAVA_POOL_SIZE (Java Pool)

=> For 11g, it goes a step further, and it is possible to use the MEMORY_TARGET parameter.

"MEMORY_TARGET=amount_of_memory" controls all memory automatically (SGA with all pools and buffers, and all pga's).

While it is better to use MEMORY_TARGET in 11g, you can still use the SGA_TARGET parameter to control the SGA only.

Also, it's still possible to manually configure all memory as you see fit, by using all individual parameters

like "SHARED_POOL_SIZE=", "DB_CACHE_SIZE=" etc..

MEMORY_TARGET and SGA_TARGET will perform automatic memory management (memory_target for all memory, sga_target for the SGA)

Both parameters can be used in conjunction with a hard upper limit for the total memory that can be used.

These are: memory_max_target and sga_max_size.

So, the following parameters might be seen as being set in an spfile.ora/init.ora:

11g:

MEMORY_TARGET=

MEMORY_MAX_TARGET=

10g and 11g:

SGA_TARGET=

SGA_MAX_SIZE=

Prior to Oracle 11g, you could set the sga_target and sga_max_size parameters, allowing Oracle to allocate

and re-allocate RAM within the SGA. The PGA was independent from this, and was set by the pga_aggregate_target parameter.

In Oracle 11g you may use the memory_max_target parameter which determines the total maximum RAM for both the PGA and SGA area's

The new MEMORY-TARGET parameter, "targets" for the set size, and even allows RAM to be "stealed" from the SGA

and transferred to the PGA, or the other way around.

19.4.7. Parameters related to ADDM and AWR:

Oracle Diagnostics Pack 11g and 10g includes a self-diagnostic engine built right into the

Oracle Database 11g kernel, called the "Automatic Database Diagnostic Monitor", or ADDM.

To enable ADDM to accurately diagnose performance problems, it is important that

it has detailed knowledge of database activities and the workload the database is

supporting. Oracle Diagnostics Pack 11g (and 10g), therefore, includes a built in repository

within every Oracle 11g (and 10g) Database, called "Automatic Workload Repository (AWR)",

which contains operational statistics about that particular database and other relevant

information. At regular intervals (once an hour by default), the Database takes a

snapshot of all its vital statistics and workload information and stores them in AWR,

and retains the statistics in the workload repository for 8 days.

Also, by default, ADDM runs every hour to analyze snapshots taken by AWR during that period.

Note: for people familiar with older Oracle versions: ADDM and AWR resembles an strongly enhanced

and automatically implemented "STATSPACK".

So, ADDM examines and analyzes data captured in the Automatic Workload Repository (AWR) to determine

possible performance problems in Oracle Database. ADDM then locates the root causes of the performance problems,

provides recommendations for correcting them, and quantifies the expected benefits.

A key component of AWR, is "Active Session History (ASH)". ASH samples the

current state of all active sessions periodically and stores it in memory. The data

collected in memory can be accessed by system views. This sampled data is also

pushed into AWR every hour for the purposes of performance diagnostics.

For 11g, ADDM and AWR/ASH are part of the "Server Manageability Packs". In fact, the components are the following:

- The DIAGNOSTIC pack includes AWR and ADDM.

- The TUNING pack includes SQL Tuning Advisor, SQLAccess Advisor, and so on.

CONTROL_MANAGEMENT_PACK_ACCESS parameter:

The "CONTROL_MANAGEMENT_PACK_ACCESS" parameter determines which of the above components are "switched on".

CONTROL_MANAGEMENT_PACK_ACCESS = { NONE | DIAGNOSTIC | DIAGNOSTIC+TUNING }

Default: DIAGNOSTIC+TUNING

If set to NONE, the ADDM & AWR and TUNING pack, are switched off.

STATISTICS_LEVEL parameter:

STATISTICS_LEVEL specifies the level of collection for database and operating system statistics.

STATISTICS_LEVEL = { ALL | TYPICAL | BASIC }

Default: TYPICAL

Gathering database statistics using AWR is enabled by default and is controlled by the STATISTICS_LEVEL

initialization parameter.

The STATISTICS_LEVEL parameter should be set to TYPICAL or ALL to enable statistics gathering by AWR.

The default setting is TYPICAL.

Setting the STATISTICS_LEVEL parameter to BASIC disables many Oracle Database gathering statistics,

including AWR/ASH, and is not recommended unless you want to reserve as much as possible performance for

the applicative database processes.

20. DIAGNOSTIC/PERFORMANCE TOOLS IN 9i: A FEW WORDS ON STATSPACK:

For the 10g/11g ADDM and AWR implementation, or the successor for STATSPACK, please see section 29.

Although statspack is a typical 8i/9i Diagnostic tool, I still like to spend a few words on it.

For 10g and later, you are recommended to use the newer frameworks.

STATSPACK is a performance diagnostic tool, which is available since Oracle8i.

It's widely used in Oracle 8i and 9i environments. But, from 10g onwards, a renewed framework

was introduced (like for example ADDM). So, in 10g and 11g, ofcourse the newer Diagnostic tools are recommended.

Still, it's usefull to spend a few words on statspack, since it's so incredably easy to use.

And who knows.. maybe you want to use it on 10g as well.

However, correctly interpreting the reports, still requires a reasonable system and Oracle knowledge.

.

20.1 INSTALLING:

It's recommended to create a tablespace "PERFSTAT" first. Since the schema (owner) of the new

diagnostic tables, is the new user "perfstat", it's nice to keep all objects together in a easy

to indentify tablespace.

Next, from sqlplus, run the create script

"$ORACLE_HOME/rdbms/admin/statscre.sql" (Unix) or "%ORACLE_HOME%\rdbms\admin\statscre.sql" (Windows).

This script will ask a few simple questions like who should be the owner (the suggested owner is "perfstat")

and which tablespace you want to use to store perfstat's tables.

Although some additional configuration (after installation) can be done,like altering the "Collection Level",

you are now "basically" setup to create "snapshots" and create "reports".

20.2 A FEW WORDS ON HOW IT WORKS:

You know that there are quite a few dynamic system "views" (v$), which collect database wide statistics,

many of which are related to performance and wait events

Statspack will use a number of true permanent tables which has a one to one correspondence to that

set of v$ views (for example v$sysstat will have a corresponding stats$sysstat table)

Well it's almost one to one, because the statspack table will have some additional columns

of which (in this note) the "snap_id" column is the most interresting one.

When you "activate" statspack, you will create a "snapshot" of the database, meaning

that the set of v$ views are queried, and the results are stored in the "stats$" tables, where these

specific results are identified by a specific "SNAP_ID".

The next time you run statspack, a new SNAP_ID will identify these new measurements.

And so on.. and so on.

It is quite critical to your understanding of the STATSPACK utility that you realize that the information

captured by a STATSPACK snapshot are accumulated values, since most of the v$ views contain accumulated values.

This automatically means, that you only can compare two snapshots, to get meaningfull results.

Examples:

- You could create a snapshot early in the morning, and one late in the afternoon, and then analyze the results

to see how the database has performed this day.

- You could create a snapshot before a certain batch (or other program) runs, and then one when that batch has finished.

Note:

Since the v$ views are cumulative, the stats$ are thus too. But an instance shutdown will "clear" many v$ views,

thus creating a report using snapshots before and after a shutdown, will not generate valid results.

20.3 A FEW WORDS ON USING STATSPACK:

- Creating a snapshot:

Logon (using sqlplus) as perfstat and execute the 'statspack.snap' package.

SQL> execute statspack.snap;

- Creating a report:

The report will just be an ascii file, so it's easy to view the contents.

Log on as perfstat using sqlplus:

% sqlplus perfstat/perfstat

Then run the 'spreport.sql' script that lives in the "ORACLE_HOME/rdbms/admin" directory.

SQL> @?/rdbms/admin/spreport.sql

This script essentially asks three important questions:

- The begin SNAP_ID

- The end SNAP_ID

- And where you want to store the report with what name.

A short while later, you can study that report. The report will show you much information like

general database statistics, top consuming SQL statements, %cpu and duration per SQL etc..

20.4 OTHER NOTES:

- Since "execute statspack.snap" will create a snapshot, it's easy to schedule it from an OS scheduler.

But don't schedule it like "every ten minutes". That will not add any value. Once an hour, or a longer interval,

is recommended.

Also, if you need to analyze batches, it's more sane to schedule it before and after those batch(es).

- If you need to view the snap_id's and at which time they have run, use a query like:

select name,snap_id,to_char(snap_time,'DD.MM.YYYY:HH24:MI:SS')

"Date/Time" from stats$snapshot,v$database;

That information can be used to select the correct start snap_id and end snap_id for your report.

21. USING A CURSOR IN PL/SQL LOOPS:

We are going to illustrate the use of a "cursor" in PLSQL loops.

PLSQL has more "ways" to create loops, like for example using "FOR.." or "WHILE.." loops

But this section wants to give a few examples using a cursor.

In many occasions, you might view a cursor as a virtual table, because you often "declare" the cursor

as being a resultset from a query on one or more tables.

Assuming you work on a testsystem, let's create a testuser first, who will perform

a couple of examples.

CREATE USER albert identified by albert

DEFAULT TABLESPACE USERS

TEMPORARY TABLESPACE TEMP

;

GRANT connect TO albert;

GRANT resource TO albert;

GRANT DBA TO albert;

EXAMPLE 1:

Let's use a cursor to output the contents of a table to your screen.

- Lets connect as albert:

SQL> connect albert/albert

- Now albert will create a simple table:

CREATE TABLE EMPLOYEE

(

EMP_ID NUMBER,

EMP_NAME VARCHAR2(20),

SALARY NUMBER(7,2)

);

- Albert now performs a few inserts:

INSERT INTO EMPLOYEE VALUES (1,'Harry',2000);

INSERT INTO EMPLOYEE VALUES (2,'John',3150);

INSERT INTO EMPLOYEE VALUES (3,'Mary',4000);

INSERT INTO EMPLOYEE VALUES (4,'Arnold',2900);

commit;

- Now we want to output the contents of the table (ofcourse it's easier using a select statement, but we want

to demonstrate the use of a cursor).

Let's try the following code:

SQL> set serveroutput on; -- in order to make sure sqlplus shows output.

-- here is the code:

DECLARE cursor CURTEST IS

SELECT emp_id, emp_name FROM EMPLOYEE;

cur_rec curtest%rowtype;

begin

for cur_rec IN CURTEST loop

dbms_output.put_line(TO_CHAR(cur_rec.emp_id)||' '||cur_rec.emp_name);

end loop;

end;

/

The first block is the cursor declaration. You notice that "CURTEST" is declared as being the

resultset of a query?

Here we also declare the variable "cur_rec", which (when assigned values) contains a whole "row"

from the cursor. You see? This is a very easy way to assign a whole row to variable in one time!

The second block is the "body" of the code. Here we open (implicitly) the cursor "CURTEST", and define a loop

where "cur_rec" in each cycle of the loop, attains the values from the next row in "CURTEST".

What we then actually do in such a cycle in the loop, is just output two values in "cur_rec" to your screen

using the standard Oracle procedure "dbms_output.put_line()".

Did you notice that we did not explicitly "open" and later (after the loop is done), "close" the cursor?

In the "for var in cursor_name loop .. end loop" structure, the open and close of the cursor is

implicitly done.

EXAMPLE 2:

This time, we use a cursor to update a certain table, with new values and certain values from a second table.

First, albert creates the EMPBONUS table, like so:

CREATE TABLE EMPBONUS

(

EMP_ID NUMBER,

EMP_BONUS NUMBER(7,2)

);

What we want to do now, is fill the EMPBONUS table with emp_id's from EMPLOYEE,

and a calculated bonus amount which is 10% of the employee's salary.

Again, using a simple query, works much faster, but again we want to demonstrate the use of a cursor.

Let's try the following code:

SQL> set serveroutput on; -- in order to make sure sqlplus shows output.

-- here is the code:

DECLARE cursor CURTEST IS

SELECT * FROM EMPLOYEE;

cur_rec curtest%rowtype;

begin

for cur_rec IN CURTEST loop

INSERT INTO EMPBONUS

VALUES

(cur_rec.emp_id,0.1*cur_rec.salary);

commit;

end loop;

end;

/

Let's see what's in the EMPBONUS table

SQL> select * from EMPBONUS;

EMP_ID EMP_BONUS

1 200

2 315

3 400

4 290

So, it worked !

MORE ON USING CURSORS:

There are two types of cursors with respect on how the cursor is opened and closed

and whether you need to explicitly FETCH the next row from the cursor.

- The "CURSOR FOR LOOP.." structure, which we already have seen above. This one does not use FETCH

in order to fetch the next row from the cursor. This is implicitly done in the "for..loop".

Also, here you do not need to open and close the cursor.

- The "OPEN cursor, FETCH next, CLOSE cursor" structure.

The first type is really easy to use. The second type gives you a little more control in your code.

The following "cursor attributes" can be used (among others):

%notfound : did we just have fetched the last row in the cursor?

%rowcount : how many rows are already processed or done?

%isopen : is the cursor still open?

As an example of the second type, let's take a look at the next example.

We are going to use a more extended EMPLOYEE table in this example. So we could either add a column, or

just drop and re-create the new EMPLOYEE table again. Let's do the latter.

SQL> connect albert/albert

DROP TABLE EMPLOYEE;

CREATE TABLE EMPLOYEE

(

EMP_ID NUMBER,

EMP_NAME VARCHAR2(20),

SALARY NUMBER(7,2),

JOB VARCHAR2(20)

);

- Albert now performs a few inserts:

INSERT INTO EMPLOYEE VALUES (1,'Harry',2000,'CLERK');

INSERT INTO EMPLOYEE VALUES (2,'John',3150,'DIRECTOR');

INSERT INTO EMPLOYEE VALUES (3,'Mary',4000,'SCIENTIST');

INSERT INTO EMPLOYEE VALUES (4,'Arnold',2900,'CLERK');

commit;

Now, we want to produce a script that will update the SALARY column of the EMPLOYEE table,

with a percentage that depends on the JOB of the employee, that is, a "CLERCK" gets another

salary update than a "DIRECTOR".

-- Here is the code. Also note it uses a type 2 cursor.

DECLARE cursor CUR_EMP IS

SELECT * FROM employee;

emp_rec CUR_EMP%rowtype;

begin

open CUR_EMP;

loop

fetch CUR_EMP into emp_rec;

exit when CUR_EMP%notfound;

if emp_rec.job='CLERK' then emp_rec.salary:=emp_rec.salary*1.2;

elsif emp_rec.job='SCIENTIST' then emp_rec.salary:=emp_rec.salary*1.5;

elsif emp_rec.job='DIRECTOR' then emp_rec.salary:=emp_rec.salary*1.7;

end if;

update EMPLOYEE set salary=emp_rec.salary

WHERE emp_id=emp_rec.emp_id;

end loop;

commit;

close cur_emp;

end;

/

Notes:

If you want to use loops in your scripts, you might also take a look at section 22.

Here we will show some examples of the regular "FOR.." and "WHILE.." loops, which in many cases

are much easier to use.

But I just wanted to touch the subject of an explicit cursor in this document.

Also, if you must process very large tables, then a cursor may not be the most effective way to do that.

22. EXECUTING SCRIPTS FROM THE "SQL>" PROMPT:

If you are at the "SQL>" prompt, you may wonder how to execute your blocks of code, or your scripts.

There are several ways to do that.

- If you have created a procedure, function, or package:

We have not done that yet in this document. In section 26 we are going to illustrate that.

- If you have "just" a block of code (like we have seen in section 21):

A: If you have that code created in your favourite editor, then just copy it, and paste it at

the "SQL>" prompt. This really works.

B: Suppose you have created the block of code using your favourite editor. Suppose you have saved it to a file.

Then you can run it from the "SQL>" prompt using the syntax:

SQL> @path_to_the_file/file_name

Note the use of the "@" symbol here.

Example:

Suppose in "C:\test" I have the file "update_customer.sql". If I want to run it from the "SQL>" prompt,

I can use this statement:

SQL> @c:\test\update_customer.sql

If I already 'was' in the c:\test directory, I can simply use "SQL> @update_customer.sql" because the prompt

tools will per default look in the current directory for the file.

EXECUTING SCRIPTS FROM THE OS PROMPT:

In this section, we might as well give an example of how to run an Oracle sqlplus script

from the operating system prompt, or from a cron job.

Create a shell script like for example:

sqlplus /nolog << EOF

connect / as sysdba

# YOUR STATEMENTS...

exit

EOF

$ORACLE_HOME/bin/sqlplus -s "/ as sysdba" < $tmp_file 2>1

set heading off feedback off

whenever sqlerror exit

select 'DB_NAME=' || name from v\$database;

.. # possible other stuff

exit

EOF

23. USING CONTROLS, AND "FOR.." AND "WHILE.." LOOPS IN PL/SQL:

Here we will present a few representative examples of "looping" structures in PL/SQL.

23.1 USING THE "WHILE..LOOP" STRUCTURE:

Example:

I presume you are working on a testsystem, so let's work as user albert again (see section 21).

let's logon as albert and create the EMPLOYEE table:

CREATE TABLE EMPLOYEE --If that table already exists, use "drop table employee;"

(

EMP_ID NUMBER(6) NOT NULL,

EMP_NAME VARCHAR2(20) NOT NULL,

SALARY NUMBER(7,2)

);

Suppose albert wants to insert 9999 dummy records into that table, he might use the following script.

Ofcourse, it's a silly example, but it nicely demonstrates the use of a "while [condition is true] loop" construct.

declare

i number := 1;

begin

while i<10000 loop

insert into EMPLOYEE

values (i,'harry',2500);

i := i + 1;

end loop;

commit;

end;

/

The first piece of code is a "variable declaration". We want to use the number "i" in our code, that starts out with

the value "1", and increases during each cycle of the loop, until it gets to the value of "10000".

Then the loop exits because the condition "while i<10000" is no longer true.

Here is another example using "WHILE [condition is true] LOOP"

Example:

declare -- again we start with a variable declaration

x number:=5;

begin -- here the "body" starts:

while x>0 loop

dbms_output.put_line('I did this '||TO_CHAR(x)||' times.');

x:=x-1;

end loop;

end;

/

23.2 USING THE "FOR..LOOP" STRUCTURE:

You typically use the "while" loop if you have an expression that evaluates to "true" for a certain time. As long as the loop cycles,

each time the expression is evaluated again and again to see if it's true or false. If it's false, then the loop exits.

You can clearly see that in the examples above, like in "while x>0" (here the "exprsession is x>0).

But you may not even know beforehand when the expression exactly evaluates to "true" or "false".

Suppose somewhere else in your code, you have a variable "v_proceed". Inside your while loop, there can be

all sorts of statements that may affect the value of "v_proceed". At a certain cycle, it may attain the value "false".

If you created your loop like this:

while v_proceed loop

do all sorts of statements.. also statements that alter the value of v_proceed

Then the loop exits when "v_proceed" is "false".

So, typically, you use "while" when a certain expression evaluates to "true" (or "false") and you know, or

dont know, when the expression evaluates to the inverse value.

Typically, using the "FOR.. LOOP", you already know beforehand the "range" for which the loop must run.

Here is a very simple example that demonstates the "FOR.. LOOP" structure:

set serveroutput on

declare

-- nothing to declare

begin

for x in 0..4 loop

dbms_output.put_line('I did this '||TO_CHAR(x)||' times.');

end loop;

end;

/

23.3 USING THE "LOOP.. END LOOP" STRUCTURE:

Maybe the following looping control is the "King of all Oracle loops". It's very simple to use.

It always has the following structure:

loop

statements (which must be repeated)

way to evaluate if the loop must exit or not

end loop;

So, you always start simply with "loop", and you end the code with "end loop".

But, as with all loops, you need to have a way that evaluates whether the loop must exit or not.

There are a few variants here, and the most used are:

loop

statements;

if condition then exit;

end loop;

and

loop

statements;

exit when condition;

end loop;

A few examples will make it clear.

Example 1:

declare

x number:=5;

begin

loop

dbms_output.put_line('I did this '||TO_CHAR(x)||' times.');

x:=x-1;

if x=0 then exit;

end if;

end loop;

end;

/

Example 2:

declare

x number:=5;

begin

loop

dbms_output.put_line('I did this '||TO_CHAR(x)||' times.');

x:=x-1;

exit when x=0; -- here we don't have the "if" test to evaluate if x=0

end loop;

end;

/

24. HOW TO PUT SQLPLUS OUTPUT IN A SHELL VARIABLE:

Suppose you want to place some sqlplus output into a shell variable.

Here, you should think of a normal sh or ksh shell variable of UNIX or Linux.

The following example shows a script that will do just that.

Ofcourse, the method works best if only one value is returned into that variable.

Example: a sh, ksh or bash script:

login='dbuser/password@DBNAME'

code=`sqlplus -s $login << EOF

SELECT name from PERSON where id=1; # suppose we have the table PERSON, and we only want the name of id=1

exit

EOF`

echo $code # now the variable code should contain that name

25. INSTANCE STARTUP OPTIONS:

The following is important if you just want to manually startup an Instance,

and optionally open the Database.

If an Instance is down, and the database closed, then the following startup options can be used.

Here, a SQLPLUS session is assumed and all environment variable (like ORACLE_SID) are set.

SQL> startup nomount

- The instance is started and the pfile/spfile is read. So all init parameters are known

to the instance, like for example SGA parameters.

- However, the controlfile is NOT read.

- Also, all databases files stay closed.

SQL> startup mount

- The instance is started and the pfile/spfile is read. So all init parameters are known

to the instance, like for example SGA parameters.

- The controlfile is opened and read, so the instance "knows" all locations of all database files.

- But, all databases files stay closed.

Usually, this is the state is where you begin a RMAN restore/recovery session,

if for example, you have lost database files, but the controlfiles and online logs are still good.

SQL> startup

- The instance is started and the init.ora/spfile.ora is read.

- The controlfile is opened and read.

- All database files are opened, and the database is ready for use.

Sometimes you start an instance and let it read the controlfile, and then perform some