A simple note on "Scripting in Powershell"

Version : 1.1

Date : 22/04/2013

By : Albert van der Sel

It's not great, or something.... But, this note might have some value, if your knowledge of Powershell (PS)

is pretty low.

Everybody can learn it fairly quickly. If even Albert can learn it..., then you can do too... for sure !

But, the note is very simple and fairly short. That's on purpose, in order to have a quick startup.

Addition 22/01/2018: Chapter 5.

This note was created when Powershell v1/v2/v3 were current. Chapters 1-4 are still very well usable, since they

only deals with the basic-, and scripting actions, and syntax, and CmdLet's.

Chapter 5 is current, that is, also Powershell v4/v5 and modules are covered, specifically targeted for

interfacing to SQL Server. So, the example shown in 4.7, is a bit outdated.

Main Contents:

Chapter 1. Introduction: What is it?

Chapter 2. Basic scripting (to get into the "mood").

Chapter 3. A more structured approach: syntaxes, loops, tests, IO.

Chapter 4. Further examples, explained.

.............Example 4.1: delete files from a folder (and subfolders) which are older than "X" days.

.............Example 4.2: Viewing and managing a "Win2K8 / Win2K12 Failover Cluster".

.............Example 4.3: Put quotes around the records of a (flat) .csv file.

.............Example 4.4: Some AD examples.

.............Example 4.5: Loop through a text file with accounts and assign file system permissions.

.............Example 4.6: Compare txt files (.txt, .csv etc...).

.............Example 4.7: Calling a stored procedure, or starting a SQL Agent job, from Powershell.

.............Example 4.8: Finding the most recent file in some folder.

.............Example 4.9: Rename file(s).

.............Example 4.10: "Tail" or Show, the end of large txt files (like large logfiles).

Chapter 5. Powershell and SQL Server.

Chapter 1. Introduction: What is it?

Powershell just looks like a "script interpreter" and commandline interface, like a sort of evolution of the "cmd"

commandline interface, which existed (and still does) in Windows for many years. But it's a bit more than that.

Microsoft choose Powershell as the platform for scripting tasks in general, but with a strong accent on all sorts of

administrative automation like installing applications, or retrieving information of your local or remote Hosts, or interacting

with Active Directory (like automated addition/deletion/modification of accounts, or getting listings of all sorts), etc.. etc..

Very typical of Powershell, is that you use socalled "CommandLets" (CmdLets), which are very specific small pieces of code, each designed

for specific tasks. You probably need to get used to it, but very typical for working with PS is, is that you place the CmdLets in series, whereas

the output of a former CmdLet, will be the input for the following CmdLet.

Here is a very simple example:

From the old fashioned commandprompt (cmd), type "powershell" and press Enter.

Note: often it is neccessary to start the prompt with "Administrative privileges" (RunAs), and later more on this.

C:\> powershell

PS C:\>

The powershell prompt, denoted by something like "PS C:\>" will come up. Now just type the following code behind the PS prompt:

PS C:\> Get-Service | Where-Object {$_.status -eq "running"}

It prints a screen of which background processes (services) are running on your machine.

Here, you have used two CmdLets: "Get-Service" and "Where-Object". The "Get-Service" CmdLet just retrieves a list of all services

which are installed on your system. That's simply its task. Normally, it would just dump that output to your screen.

However, by using the "|" (pipeline) symbol, we redirected the output from "Get-Service", to the next CmdLet,

namely "Where-Object". So, instead of dumping the records of the output to the screen, the "Where-Object" will receive them

as input.

As "Where-Object" gets record by record from "Get-Service", you need to tell it what to do with it. That's determined within the "{ }"symbols,

because here you see the "condition" that tells "Where-Object" what to do:

It must filter the output of "Get-Service" to show only the running services, and not all installed services (of which

many maybe not running). Thats actually the condition "status equals running", or in code: {$_.status -eq "running"}.

Now you may still wonder about the "$_" symbol. Indeed, often you do not need need to declare variables beforehand, and some are

just already "implicitly there" so to speak. "$_" is a standard variable, acting as a container for records destined

to, or from, CmdLet's.

It means this:

We have setup a "pipeline" from the "Get-Service" CmdLet, to the "Where-Object" CmdLet.

So, data "streams" from "Get-Service" to "Where-Object"CmdLet. Every record transferred, is contained in the "$_" variable,

which is always how it works in Powershell, when two CmdLets are connected by a pipe.

Now, if you would zoom in into one such a record, it usually has multiple properties (values), and it this specific case,

we have a "status" property, which may have either of two values: "running" and "not running".

Powershell is also "Object Oriented", so an "object" as a record in $_, may have different properties.

In the example above, $_.status is such a propery, which may have a certain value (like "running").

So, it makes sense to have as a condition for the "Where-Object" CmdLet, the expression {$_.status -eq "running"}.

⇒ How to run code:

There are two main ways to run code.

- Just like above, you simply enter it interactively at the PS prompt. When you are ready typing, you can run it immediately.

- Or, you place code in a file (scriptfile), and then you have the code stored, and you can run it anytime you want,

or you can even schedule it to run for specific date/times.

⇒ How to create code (in scriptfiles):

There are at least two ways to create powershell code:

- Simply use notepad or any other (flat file) editor, and save the file in a suitable folder.

Then, from the PS prompt you can easily call it like for example "PS C:\TESTSCRIPTS> .\myscript.ps1".

Here, the file "myscript.ps1" contains the PS code. Dont worry for now how this works exactly. We will see many examples below.

- Instead of simply using an editor, since Powershell v. 2, we have the "Integrated Scripting Environment" (ISE) available

which is a sort of graphical user interface interface, like a "workbench" really, to create scripts.

Actually, it doesn't matter too much what you choose. In this note, we simply use an editor to make scripts.

And, whatever you would have choosen, you need to type code anyway. But, ISE has indeed a few smart features.

⇒ A tiny bit more on the architecture:

If you don't care too much about the architecture, you can skip this section and go to Chapter 2.

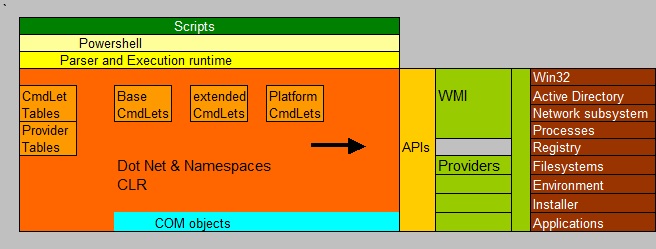

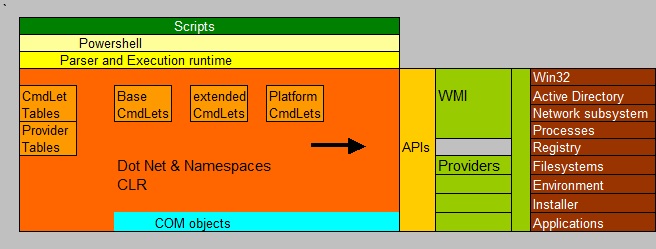

Figure 1 below, is a simple "sketch" of the general architecture. Powershell CmdLets are actually dot Net code.

Dot Net is Microsoft's modern architecture for developing, deploying, and running applications.

With modern Windows versions, you get a set of "base" CmdLets. However, whenever you install a business application

like SQL Server, Exchange (and many more), you get additional specialized CmdLets that let you interact with those applications.

Fig 1. Simplified architecture Powershell.

Your CmdLets, usually uses "providers" and api's to access various parts of the Windows Operating System, like the Registry, AD etc..

Traditionally, the inteface to "WMI" (Windows Management Intrumentation) is much used. For example, using the "Get-WMIobject" CmdLet,

it's possible to retrieve information from almost everything of your system (like disks, printers, cpu, memory, processes etc..).

That's why I printed WMI "very fat" in the figure above, to underline it's importance.

However, Powershell is not limited to the Windows Operating system. If the right CmdLets and providers are installed, you can

access other platforms too.

Alongside the "base set" of CmdLets, delivered with your system, many specialized modules (containing providers and CmdLets)

came available the past few years. For example, easy to use CmdLets to access Active Directory, and to access all sorts of applications etc..

This "storm" is far from settling down. You will see: CmdLets and providers will come out that will let you access virtually everything.

That's why investing time and effort in studying Powershell is one of the best things you can do right now.

Chapter 2. Basic scripting (loops, file modifications etc..).

2.1. A few preliminaries.

In this chapter, we will create various scripts. Ofcourse, we will save them as files in some folder.

So, I suggest that you create a folder, called something like "C:\TEST" (or another name), and store your files there.

But we will also just type in code "interactively", as in example 1 below.

In creating scripts, we are going to use an 'flat file' editor, like notepad. I'am perfectly happy using notepad, but I must

say that are some free editors around with extended capabilities. Just google on "free Powershell editor". Maybe you like one of them.

But, since I only type in some short code pieces, notepad will do fine for me.

Before we do anything at all, here are some preliminaries for writing scripts. Just take notice of them.

⇒ Powershell file extension must be ".ps1":

When you create scripts, be sure to name the file with the ".ps1" file extension.

So, suppose you create a script that installs a certain printer, you might consider to name it something like "installprinter".

But, take notion of the fact that the file extension must be ".ps1", so, in the example above,

you should save the file as "installprinter.ps1".

⇒ If your scripts won't run:

It's quite possible that the execution of saved scripts is disabled on your system as a result of a security policy.

Indeed, for Servers in a business environment, one should carefully consider the pro's and contra's of this measure.

You can enable the execution of scripts using the "Set-ExecutionPolicy" CmdLet.

Then use one of the following options for this CmdLet: Unrestricted, RemoteSigned, AllSigned, Restricted.

If you use "Restricted", the execution of scripts is totally "off".

So, for systems in a business environment, you should carefully consider what is the best for you.

Here, I suppose you use a personal test system, so let's go "unrestricted":

PS C:\test> Set-ExecutionPolicy -executionPolicy Unrestricted

Also, on Vista, Win2K8 etc.. If you start Powershell from the commandline (cmd), you might consider to start the command prompt

first with "elevated privileges" (Run as Administrator) and next start Powershell. Otherwise, you may run into permission issues.

In some situations, you do not want the "elevated privileges", but in others (like installing/modifying stuff)

you probably do need them.

2.2. A few examples of using Powershell for file operations.

With Powershell, you can perform all sorts of file manipulations.

Using a few examples, allows me to demonstrate a few important CmdLets, and how code "looks like".

In many businesses, people are searching for the best solution for file manipulation. To illustrate this, suppose the organisation

uses a database where ascii files must be imported into, at a regular basis. Often, such a file does not "fit" in the database

in it's current layout. For example, it has one column too many, or one field must be modified, before it can be imported cleanly.

Example 1: cutting one column off the content of a file

Picture a scenario like this. Your boss runs in your office, waving with a USB stick and yelling: "This ascii file has one column too many".

But it's so large, I cannot use Excel or something.... Its too darn large!! I suppose you have some clever script dont you....??"

While copying the "customers.txt" file from the stick to the "c:\test" directory on your local harddrive, you start thinking.

Lets mimic this situation. Create a flatfile called "customers.txt", with some fields, all seperated by just one "whitespace", like so:

1 ABC Amsterdam

2 Goofy Washington

3 Ghostbusters Denver

The "Get-Content" CmdLet could be interresting here. You can use it to spool the content of a flat file to the screen,

or even redirect it to another CmdLet.

Lets view the content of the file using "Get-Content" first:

PS C:\test> Get-Content c:\test\customers.txt

1 ABC Amsterdam

2 Goofy Washington

3 Ghostbusters Denver

Now, lets try to get the third column off. Here is a piece of code which achieves that:

PS C:\test> Get-Content customers.txt | %{$data=$_.split(" "); Write-Output "$($data[0]) $($data[1])"}

1 ABC

2 Goofy

3 Ghostbusters

Now, that line might look "impressive", bit it's really not difficult, once you are acustomed to Powershell style.

I think it's important that you know, that the "exact" details of the syntax are not important right now: I would like you to understand

the "general idea" only. Only after chapters 1,2 and 3 are "consumed", you should have an idea about the right syntax.

Let's see what the upper command does.

First, we have "Get-Content customers.txt | " part, which opens the file, and then it wants to push every record it reads

to whatever follows after the "|" pipe symbol.

Now actually, the stream of data goes to the CmdLet "Write-Output", which seems to print only two variables, namely

$data[0], and $data[1].

But before the "Write-Output" CmdLet comes in action, you will notice the variable assignment "$data=$_.split(" ");".

As we know from above, every record pushed by "Get-Content", is contained in the "$_" variable.

So, as the first line is read by "Get-Content", the "$_" will contain:

1 ABC Amsterdam

But $data is defined as "$data=$_.split(" ");", where the split method is called from the object "$_".

Now as you may know from modern Object Oriented Languages (OO), an "object" might contain data, as well as call-able

functions. Here, "$_" exposes indeed an number of function, among "split()" is one of them.

Now, let's quickly see what split() does. Take a look at the following intermezzo:

Take a look at this code:

First, we declare a variable $string = "fries hamburger milkshake" to be one contiguous string. Then we call the

split() method, to split up this string in independent pieces. Note that the argument to the split function is " "

which tells split(), to split on the "whitespaces".

PS C:\TEST> $string = "fries hamburger milkshake"

PS C:\TEST> $string.split(" ")

fries

hamburger

milkshake

Once a variable is of "string" type, you automatically have the ".split" method (function) available.

So, what "$_" contains, will be split in independent piecies, and placed into the $data variable.

Now, $data is automatically an "array of values", because of the intrinsic power of Powershell.

Powershell "knows" that if any variable contains independent pieces, it treats it as an array of values.

So, you might say that $data can be seen as $data[0], $data[1], and $data[2] (counting from 0).

Thus, for the first record read and pushed by "Get-Content", we have:

$data[0]=1, $data[1]=ABC, and $data[2]=Amsterdam

Lastly, "Write-Output" will only print $data[0] and $data[1].

This process is continued for the second record read by "Get-Content", and the third etc.. all the way down to the last record.

The result is that we have cut off the third column. However, the result is printed on your screen.

Note:

Sometimes a command entered at the Powershell prompt, is a bit "long". You can always press enter right after a pipe symbol,

since Powershell then knows that you want to enter more text.

Powershell then displays the ">>" symbols which denotes that you can enter more text.

So, then it looks like this:

PS C:\test>

PS C:\test> Get-Content customers.txt |

>> %{$data=$_.split(" "); Write-Output "$($data[0]) $($data[1])"}

>>

1 ABC

2 Goofy

3 Ghostbusters

Example 2: cutting one column off the content of a file, and save it to another file

Basically, it's the same as example 1 above. So for explanations, see example 1.

As you can see in the code below, this time we use a third CmdLet, namely "Out-File", which takes any stream it gets left from the pipe,

and writes that to a file of your choice, in this case "customers2.txt".

PS C:\test> Get-Content customers.txt |

>> %{$data=$_.split(" "); Write-Output "$($data[0]) $($data[1])"} | Out-File customers2.txt

>>

PS C:\test>

If you open customer2.txt with notepad, you will see that only two columns of data is present.

Example 3: cutting one column off the content of a file, using a script, with a parameter

What we have done above, we are now going to place in a real script. Ofcourse, the script must be setup in such a way so that

it can be called with an argument. This argument is then any txt file, from which we only want the first two

columns saved in another file.

So, in the "C:\TEST" directory, start notepad and create a file called "test1.ps1".

Place the following code in "test1.ps1":

Get-Content $args |

%{$data=$_.split(" "); Write-Output "$($data[0]) $($data[1])"} | out-file newfile.txt

Now, let's run it:

PS C:\test> .\test1.ps1 customers.txt

You should notice two things here:

(1):

Do you see that I called the script, with a parameter (or argument), which is the name of the file from whose content

we want only want two columns stored in another file.

Do you also see the special variable "$args" directly behind "Get-Content"?

Using a script with a simple parameter is really easily done, since the $args automatically takes on any value

of the argument passed to the script.

(2):

Starting a script from the Powershell prompt is really done in this way: ".\scriptname.ps1".

You might wonder where this strange looking syntax is coming from.

A "." ( a dot) means the "current directory", which in this case is "c:\test". Now, since we need to add a slash "\" to get a full path

to the file, we have string like ".\scriptname.ps1" which then resolves to "c:\test\scriptname.ps1".

2.3. A few examples of using Loops.

Let's review a few examples that use a loop. In this section, we will illustrate the "foreach" loop.

You can find more loop constructs, like the "while" loop, in Chapter 3.

Example 4:

Just type in the following interactively at the PS prompt:

PS C:\TEMP> $nodelist=("Harry","Nancy") -- press enter

PS C:\TEMP> foreach ($file in $nodelist) -- press enter. The prompt changes to ">>"

>> {

>> write-output "hello $file"

>> }

>>

hello Harry

hello Nancy

This one should be easy to follow. First, we have a variable "$nodelist" containing the values ("Harry","Nancy").

Did you noticed that I did not declare that variable at all? I did an value assignment right away, and Powershell automatically

declares the variable for me. Saves time and coding.

Then we have the heart of the loop using the "foreach" statement.

Foreach uses a "condition" that, if it evaluates to "true" (or if still values present), it goes for another swing. Otherwise, it stops.

In this example, it means that "as long as there are values in $nodelist", perform the statements between the "{" and "}".

Example 5:

In this example, we use a script to start (or stop) a set of related services of some kind.

In this example, a take Oracle services on a Windows system, where the display name of such a service starts with "Oracle"

like for example the service "OracleServiceTESTDB11g"

Now, for your system, if you don't have Oracle installed, maybe you can think of another set of services to use.

Or, you can just read this example and not do it "hands-on". The primary object is that you understand the loop that is used here.

You don't have to "perse" perform each example on your system.

So, lets create a script.

Start notepad (or any other editor) and type the following code:

foreach ($svc in Get-Service){

if(($svc.displayname.StartsWith("Oracle")) -AND ($svc.Status -eq "Stopped")) {

echo $svc.DisplayName

Start-Service $svc.name

}

Save the script with the name "oraclestart.ps1" in the "c:\test" folder.

You can run the script, from the PS prompt, like so:

PS C:\TEST> .\oraclestart.ps1

It should not be too difficult to follow this code.

First, we have a "foreach" loop. The condition of this loop is a list of all "services", that "Get-Service"

has produced as a list. So, actually, all code in the "if" statement is repeated for all services.

But only where the "service display name" starts with "Oracle" and where this service has the status "stopped", then

the code between "{" and "}" is executed. The result is that all stopped Oracle services are started.

If you can't actually do this example, don't worry. The main goal is to get a grip of Powershell, and not on a particular script example.

Example 6: Rename a set of files: using a "sort of loop" (list), but its not an explicit loop.

This is a bit of a "weird" example. Here I use Powershell to simply rename a bunch of *.txt files to *.bak files.

Now, you might be tempted to use "foreach" again. That would be very reasonable, since "foreach" can be used for setting up loop.

Reasoning along these lines, you might first try to simply list the *.txt files, and if that works, you might think

that there must surely exist a CmdLet that lets you rename an item (indeed, there is one, namely "Rename-Item").

So, suppose you have a few .txt files in "c:\test", then lets try a simple listing first:

PS C:\test> $file=ls *.txt

PS C:\test> foreach ($a in $file) {

>> write-output $a

>> }

>>

Yes, this produces a listing of all *.txt files. So, if between the "{" and "}" a Write-Output is possible, then some

CmdLet that renames stuff must be possible too.

This is an example where you might easily going to follow a path that's not wrong, but maybe somewhat slightly cumbersome.

What we want can be done in a much more efficient way, if only we knew of a CmdLet that produces a list of the right set of files,

which we immediately can pipe through to the "Rename-Item" CmdLet.

For this, the "Get-ChildItem" would work perfectly. Just take a look a this working code:

PS C:\TEST> Get-ChildItem *.txt | Rename-Item -NewName { $_.name -replace 'txt', 'bak'}

The "Get-ChildItem" CmdLet, resembles the old DOS "dir" command. You can get a full listing of all files, or just a "filtered" listing,

like a list of *.txt files, or like a list of files that have a name which begins with "book*" etc.. etc..

Now, its actually the same story again: Get-ChildItem produces a listing, a recordset as it were, where each record is piped

to "Rename-Item". Then "Rename-Item" does the actual renaming of each filename that it receives from Get-ChildItem.

Ofcourse, "$_" is used again, where the "$_.name" is actually the property to give another value.

It's not an explicit "loop" we see here. But, still, we have a piece of code that just works through a list, namely the list

that "Get-ChildItem" produces.

This CmdLet is worth while to remember. It comes in handy anytime you want to do something with a list of files.

Question:

Instead of:

PS C:\TEST> Get-ChildItem *.txt | Rename-Item -NewName { $_.name -replace 'txt', 'bak'}

Could we also do simply this?:

PS C:\TEST> ls *.txt | Rename-Item -NewName { $_.name -replace 'txt', 'bak'}

Example 7: Nesting

More information on the usage of the curly brackets "{ }" and parenthesis "( )" can be found in the next chapter.

However, it's good to show in this section, how you should use "nested" statements. A simple example can illustrate this.

Suppose in some directory, you have files with names like *aa*.txt, *bb*.txt, and many other names.

Suppose you only want to select the *aa* files, and perform some operation on them.

Then, you could

- first create a collection (or list) to contain all files.

- then, using "foreach", loop through the list.

- then, use an "if" test, to test if a member is an "*aa*" file.

- then, perform whatever operation.

$filelist=ls *.txt

foreach ($myvar in $filelist)

{

if ($myvar.name -like "*aa*")

{

write-output $myvar.name

}

}

Ofcourse, the above example can be simplyfied by just creating a collection like "$filelist=ls *aa*.txt

However, the purpose is to show you the "nesting", and the use of "{ }" enclosing various "levels" of statements.

Chapter 3. A more structured approach.

Let's take a more structured approach now. Now, its my experience that a very structured doc is.., well.., sometimes a bit dull.

But the author here is a certain Albert. So, very structured stuff is not to be expected. ;-)

3.1. Powershell variables.

As we have seen before, a variable does not need to be declared before you can use it. You can immediately do an assignment

of a variablename, to a value.

For example (as seen before):

PS C:\TEST> $string = "fries hamburger milkshake"

PS C:\TEST> $string.split(" ")

fries

hamburger

milkshake

Here, "$string" is a variable, which seems to hold a "string-like" value. Well, it's string alright, because the value is enclosed by "".

Ofcourse, "numbers" can be placed in a variable too. Try this:

PS C:\TEST> $var1=5

PS C:\TEST> $var2=2

PS C:\TEST> $var3=$var1 + $var2

PS C:\TEST> Write-Output $var3

7

Yes, 5+2=7, so variables holding numbers works too. Also, if such a simple calculation works, then it's immediately clear that also quite complicated

arithmetic will work too in a similar way.

Note that I used "Write-Output". We have seen "Write-Output" already before in a former example. But with a variable, you don't need to use that CmdLet.

Just entering the variable name from the PS prompt will show it's value.

I did not use the "$" character in the variable names without a reason. In Powershell it's simply required.

So all your variables must start with a "$" in their names.

Typing:

It's interesting to see what happes in above calculation, if you would try $var1=5 and $var2="2"

That is: $var1 is obviously a number, but $var2 seems to be a string because it's enclosed by "".

As you will see, the calculation still works like clockwork.

So, Powershell does not place "severe restrictions" on types beforehand. No "strong typing" here. I think it's swell for a scripting language.

However, if needed, you can try (a bit of) "strong typing", like telling Powershell beforehand what the datatype is. Like so:

PS C:\TEST> [int] $var1=10

PS C:\TEST> [int] $var2=2

PS C:\TEST> $var3=$var1 * $var2

PS C:\TEST> $var3

20

Special Variables:

"$_":

Remember the "$_" variable? Its always the variable acting as a placeholder for data when CmdLet1 "pipes" the records

to CmdLet2. So, CmdLet2 can then immediately work with the "$_" variable.

"$args":

When you create a script that expects one or more arguments (parameters), then $args in the script will be the holder of those argument(s).

See example 3 in chapter 2 for an example.

There are a few more of those "special" variables. These are not too spectacular.

Arrays:

An "array" is a sort of "multi-value" variable. So, it stores multiple values (also called "elements"). Now, to address one particular value

in the array, you can use an "index". Just like the homes in one street, you can distinguish between those homes,

since they all simply have a unique number. In an array, the "index" starts from 0.

Remember example 1 in chapter 2? Here we had a variable $data, which contains three elements. In this example, "Write-Output"

only printed the values $data[0] and $data[1]. It did not printed $data[2].

It might be instructive to take a look at example 1 again.

To use an array, you can simply assign a "multi-value" range to a variable like in these examples:

PS C:\TEST> $myArray1 = 1,2,3,4,5

PS C:\TEST> $myArray2 = "oracleSRV1", "oracleSRV2", "oracleSRV3"

PS C:\TEST> Write-Output $myArray2[1]

oracleSRV2

Task:

This note is no more than just a simple intro. Once you have obtained some grip in Powershell,

find out what you can do with the "hash table" variable.

3.2. More on Loops.

The "foreach" loop:

In chapter 2, we have seen a few examples of the "foreach" statement, like for example:

PS C:\test> $file=ls *.txt

PS C:\test> foreach ($myvar in $file)

>> {

>> Some code...

>> }

>>

Actually, the "foreach" statement is great for working through some list, or collection, just like the example above,

where "ls *.txt" produces a list of .txt files.

The "Foreach-Object" loop:

Just as above, "Foreach-Object" CmdLet is great for working through some list, or collection.

Now, "foreach" is a command in an expression, while "Foreach-Object" is a CmdLet.

So, whenever jou have a CmdLet that "pipes" records to a next CmdLet for processing that record collection, you should use

the "Foreach-Object" CmdLet. So, "Foreach-Object" takes its records from the pipeline.

The "Foreach" statement, does not need a pipeline. It just takes a list of values (like names of files etc..)

As an example of using the "Foreach-Object" CmdLet, take a look at this example:

PS C:\test> Get-WmiObject Win32_Printer | ForEach-Object {$_.Name}

The "Get-WmiObject" CmdLet pipes records from the "Win32_Printer" class, to the "Foreach-Object" CmdLet.

So, its a clear example how the "Foreach-Object" CmdLet gets it's "input".

But there are more loop constructs in Powershell. Sometimes, you want to work with a "counter" that increases (or decreases)

with each iteration, until this counter reaches a "final value" (like 0, or 1000 etc..).

For this type of true loops, the "while" loop can be used.

The "while" loop, or equivalently, the "do while" loop:

The "while" loop (or "do while" loop) is very usefull when you want some processing done "while a certain condition remains true".

It's defined as follows:

while (condition true)

{

statement(s)

}

or stated a bit differently:

do {statements}

while (condition true)

Both forms are equivalent. To illustrate it, try this example. As the condition I have used "while (1 -le 2)",

meaning: "while (1 is lower than 2) do statements"

This condition is always true! So the statements will be repeated forever (until you use Ctrl-C).

PS C:\TEMP> while (1 -le 2) {write-output "hello"}

You see? It's a silly example. The condition is such, that never an "untrue" value will be reached.

A better example would be this:

PS C:\TEST> $i=1

PS C:\TEST> while ($i -le 5) {$i=$i+1; write-output "Hi, here is $i"}

Hi, here is 2

Hi, here is 3

Hi, here is 4

Hi, here is 5

Hi, here is 6

Here is use a counter, $i, which initially is set to "1".

Then the condition follows, which in English is: do while $i is lower than or equal to 5, the following statement(s).

In the statement block, I first increase the counter by 1. Then follows a print statement.

Question: Can you explain why the output shows a $i=2 first, and stops at $i=6 ?

Many years ago, like in the old fashioned "C" programming age, a much better way was developed to describe the increase

of such a counter, as "$i" in the example above. Once you have seen it, you will never ever use the "boring" $i=$i+1;

increment again!

Take a look at this compact way to code:

PS C:\TEST> $i=1

PS C:\TEST> while ($i -le 5) {write-output "Hi, here is $i";$i++}

Hi, here is 1

Hi, here is 2

Hi, here is 3

Hi, here is 4

Hi, here is 5

You see that? The "$i++" is the same as the "boring" "$i=$i+1", but it has certainly some more "bling" and style.

By the way: professional Powershell scripts will always use that "style", since thats the way to to program increments since ages.

Question: Can you explain why the output shows a $i=1 first, and now stops at $i=5 ?

Sometimes you do not want an increment of $i by "1", using $i++ but an increment by any integer like "3" or "5" or "100" etc...

For this, you can use the "$+=increment" notation. An example might make this clear:

PS C:\TEST> $i=5

PS C:\TEST> while ($i -le 26) { $i; $i+=5}

5

10

15

20

25

So keep the usages of "$i++" and "$i+=value" in mind. At many times, they are incredably usefull.

3.3. More on CmdLets.

Up to now, we have only seen the following CmdLets:

Get-Service

Where-Object

Set-ExecutionPolicy

Get-Content

Write-Output

Write-Host

Get-WmiObject

Get-ChildItem

Foreach-Object

But we are going to meet quite a few more in the text below.

How can we get a list of availble CmdLets? To get a list of CmdLet's which are available in your session,

you can use the "Get-Command" CmdLet.

PS C:\TEST> Get-Command

This produces a large list of CmdLets, with a possible "alias" (or shortname) to that CmdLet.

The listing will rush by on your screen. If you want to see it "one screen at the time", use "Get-Command | more".

Anyway, it's an impressive list.

A CmdLet is "dot Net" code. It's not like a traditional ".exe", that you can find in some folder on disk.

Instead, dot Net "classes" were created in a hierarchical "namespace", which naming structure "resembles"

a sort of folder structure.

Objects can be "intstantiated", meaning that they get executed in the supporting dot Net runtime environment.

The classes are the blueprints, for the objects that "live" in the dot Net "machine".

Often, CmdLets are implemented in "assembly files" which really can be found on disk. The filenames of such files

often have a resemblence to the part of the namespace they belong too. For example, you might find files like

"Microsoft.PowerShell.Management.dll" or "Microsoft.WSMan.Management.resources.dll" etc.., if you would search for it.

It's also possible to "load" additional CmdLets in your session from "some" assembly module.

What often happens too, is that when you install additional features or roles on a Windows Server system,

that then also additional Powershell modules get installed on your system, often in the form of assemblies.

Importing additional functionality: "Add-PsSnapIn" and "Import-Module":

Before you start to work on some project, are you sure you have the right CmdLets for the job?

You know, with the base set of CmdLets, you can create perfect scripts for a almost unlimited variety of tasks.

However, sets of CmdLets can be "imported" to your session, and it could well be that those CmdLets are a great help

for certain tasks, like managing Internet Information Server, managing SQL Server, Sharepoint, Clusters, VMWare etc.. etc..

In Powershell version 1, you could load a socalled "Snapin" which was the source for additional CmdLets.

In version 2 and higher, you can now load a socalled "Module", which does not need registering, and is easier to use.

But, also in v.2 you can load a Snapin, if required.

There are two CmdLets that let you import a Snapin or a Module (see below): Add-PsSnapIn and Import-Module.

For example, PowerShell snapins are binaries (.dll) that implement cmdlets and providers. You must install the snapin

and then add the cmdlets in the snapin to your session using the Add-PSSnapin cmdlet.

Here are two examples:

Powershell v1 style (after installing the snapin). You might have to use Set-Location "path" first.

PS C:\TEST> Add-PSSnapin Microsoft.SharePoint.PowerShell

Powershell v2 style:

PS C:\TEST> Get-Module -ListAvailable

produces a list of available modules...

PS C:\TEST> Import-Module -Name ModuleName

Since CmdLets are perfectly designed for "passing objects through a pipe", the following statement would not be very surprising:

PS C:\TEST> Get-Module -ListAvailable | Import-Module

As an example, if you would load the "Active Directory" module, many new CmdLets are available which are specialized for

AD operations. This means that with simple short statements or scripts, you can add or list accounts, computers etc.. etc..

Truly amazing stuff, really.

You would load the AD module, using:

PS C:\> Import-Module ActiveDirectory

On a Win2K8 DC, the module is available right away. From a Win7 workstation, you can load the module,

but additional steps need to be taken. This is not the subject of this introduction.

If you would want a list of AD useraccounts, using VB, it would be a relatively lengthy story (well, not really that much).

Not with PowerShell. On a DC, try this:

PS C:\TEST> Get-Aduser –filter * | export-csv "listing.csv"

The "Active Directory Module for Windows PowerShell", offers at least 90 (or so) new CmdLets for full

AD management, like for example "New-ADUser", "New-ADComputer".

The upper information is truly "minimal". The only effect can be, that at least you are aware that there exists options

to load additional functionality.

3.4. Functions.

A "function" is nothing else than a block of statements, identified under one "name".

Usually, a function takes one or more arguments, but that's not a requirement.

It's really that simple. So, the "simplest of simplest" of all functions, could be this one:

PS C:\TEST> function myfunction

>> {

>> write-host "Hi there!"

>> }

>>

PS C:\TEST> myfunction #call the function

Hi there!

Obviously, this one is not so usefull, but it's still a fuction allright.

- One obvious advantage of a function is, is that you can call it more than once in a script.

- Another advantage is, is that you can create a function to do specific things, and once it works,

you can just almost blindly re-use it again in other code.

- If you have a large script, the use of a function makes it easier to read, instead of repeating the code over and over again.

Using parameters (or arguments) can be done in several ways.

One way is to define the function with parameters directly. Here is a simple example:

PS C:\TEST> function findfile ($p, $f)

>> {

>> Get-Childitem $p -Recurse | Where {$_.Name -eq "$f"}

>> }

>>

PS C:\TEST> findfile "c:\" "oops.txt"

Mode..........LastWriteTime......Length...Name

-a--..........06.01.2013 19:26...5........oops.txt

Ok, maybe not the greatest example of all, but it should be easy to follow. Here, I created a function that finds a file.

It uses as arguments the "starting path" (like "C:\") and the "file name" (like "oops.txt").

This example relies heavily on the "Get-Childitem" CmdLet. This is an important CmdLet indeed.

We have seen it before in example 6. In short, a folder can be the "parent" of subfolders and files.

So, if we use a CmdLet which essentially says "get all childs within $p" with the condition "where the childname must be $f",

then it indeed returns the name of such a file, if it exists.

The above really is just minimal information. There is much more to learn on functions.

3.5. A few notes on when to use curly brackets "{ }" and parenthesis "( )".

In the scripts sofar, we have seen the use of curly brackets "{ }" and parenthesis "( )" in various "places".

But there has to exist certain rules for this. That's indeed true.

Sometimes it "feels" quite intuitive, the more so if you already have use other scripting/programming languages.

For example a "statement block" always goes between curly brackets "{ }". For example:

$filelist=ls *.txt

foreach ($a in $filelist)

{

write-output $a

}

Although the "statement block" here, is just one statement, the "write-output $a" sits between the "{ }"

The simple example above, also shows a clear example when using parenthesis "( )".

The "foreach" loop must process a list, and this list is a sort of "control structure" for the loop on where to start,

and when to end the loop. In this case, the controlstructure is just a list of all .txt files.

But this is typical for loop structures. Anytime a list or expression is used to control a loop structure, it'sits between "( )".

Here, take a look at this while loop:

$i=1

while ($i -le 5) {write-output "Hi, here is $i";$i++}

But there are occasions where the use of curly brackets "{ }" and parenthesis "( )" might not be so obvious.

For example, when you have a CmdLet that pipes to another CmdLet, where the second CmdLet must evaluate a condition or expression,

then curly brackets "{ }" are used.

Here are a few examples:

=> For each device in the printer class, only show it's name:

Get-WmiObject Win32_Printer | ForEach-Object {$_.Name}

=> Only print the properties for this one printer, called "Fax":

Get-Wmiobject Win32_printer | where {$_.name -eq "Fax"}

=> Again an example where a CmdLet (Get-Childitem) pushes records to "Where" (an alias to Where-Object), which must evaluate something.

function findfile ($p, $f)

{

Get-Childitem $p -Recurse | Where {$_.Name -eq "$f"}

}

Whe have seen the use of square brackets "[ ]" too, in a few occasions. They are often used to denote array members.

3.6. WMI.

WMI or "Windows Management Instrumentation", abstracts computer resources (like disks, memory, netcards etc..), and

anything else for which a socalled "WMI providers" exists.

For example, if there should exists a "provider" to see "scheduled tasks", then this resource is viewable from the WMI api too.

So, it's not only hardware resources that are covered by WMI, but applications and Operating System resources as well.

WMI is Microsoft's implementation of the general "CIM" standard ("Common Information Model").

In WMI language, the stuff that is managed and viewable, are the "Objects", which (as usual) are organized "in" "Classes".

The "script" or "programming language" that wants to access the managed objects, are often called "consumers".

These can access managed objects through the use of the right "providers".

The WMI infrastructure is fairly complex, and is partly also "COM" based. For this note, we do not need to know all of the details.

Important is, that Powershell CmdLets are available to query the WMI repository, which can obtain lots of systemdata.

The WMI repository uses hierarchical namespaces. Every set of objects which are related, gets their own "namespace".

Each namespace has a sort of "root". The default "root", if Powershell accesses WMI, is "root\cimv2", which is a collection

of classes which represents your computer system as a whole. However, usually other namespaces exists on your system as well,

like for example related to "applications", or a Directory Service etc..

The most important CmdLet is "Get-WmiObject", which can query namespaces, and all classes within a namespace (which is a class itself as well).

Let's try this:

PS C:\TEST> Get-WmiObject -namespace "root\cimv2" -List

This should return an impressive list of classes which you can query further.

Since the default namespace is "root\cimv2", you do not need to put that as a parameter if you want to get information

of the classes shown in the above listing. You can just query the classes directly. Here are a few examples:

PS C:\TEST> Get-WmiObject -Class win32_LogicalDisk

PS C:\TEST> Get-Wmiobject -Class win32_OperatingSystem

PS C:\TEST> Get-WmiObject -Class Win32_NetworkAdapter

PS C:\TEST> Get-WmiObject -Class Win32_Printer

Since an Object (like a printer) might have many "properies" (each with a value), you might be interrested in one property only.

In such a case, for printer objects, you can pass the records through to (for example) the "ForEach-Object" CmdLet,

and let it only print the "Name" (of each printer).

Remember: Every record transferred by "Get-WmiObject", is contained in the "$_" variable, which is always how it works

when two CmdLets are connected by a pipe. So, if you only want the printername, then get the "$_.name" property for each printer in the list.

PS C:\test> Get-WmiObject Win32_Printer | ForEach-Object {$_.Name}

Chapter 4. Some Further examples.

In time, for further "inspiration", I will add a couple of more scripts here, with appropriate comments.

For now, I think that already a few nice examples are listed. You can try them, or just simply browse along the code.

If you would agree (understand) the logic and syntax..., that only would already be fantastic !

Example 4.1: delete files from a folder (and subfolders) which are older than "X" days.

The problem can be defined as follows:

Many applications create logfiles. But after weeks, or months, those logfiles piles up, and take too much diskspace.

Maybe you only want to have the last 7 days, or 30 days (or so), online on disk.

So, older logfiles can be deleted. The following PS script does that.

You can schedule such a script, for example, to run once a day, once a week etc..

$Today = Get-Date

$NumberOfDays = "7"

$StartingFolder = "C:\temp"

$Extension = "*.log"

$LastWrite = $Today.AddDays(-$NumberOfDays)

# Body of code:

$FilesCollection = Get-Childitem $StartingFolder -Include $Extension -Recurse | Where {$_.LastWriteTime -le "$LastWrite"}

foreach ($File in $FilesCollection)

{

Remove-Item $File.FullName | out-null

}

Now, let's walk through this script.

You see that first a few variables are set. You know that any variable starts with a "$" symbol.

The variable $Today equals the CmdLet "Get-Date", which, when called, retrieves the current date/time.

Then a few fixed variables are set, like "$StartingFolder", which I placed here at "C:\TEMP".

The determination of "$LastWrite" deserves some explanation. You know that the plan is to remove all files

which are older that 7 days. To get at that point, we need a calculation later on, where the "date of 7 days ago"

must be known. Then we can investigate the "last write time" of any file, and compare it to the "date of 7 days ago".

You can try the following statements (or just read them...)

PS C:\TEST> $Today=Get-Date

PS C:\TEST> write-host $Today # lets see what we have

05.01.2013 13:25:02

PS C:\TEST> $NumberOfDays="7"

PS C:\TEST> $LastWrite = $Today.AddDays(-$NumberOfDays)

PS C:\TEST> write-host $LastWrite

29.12.2012 13:25:02

Yes, "$LastWrite" contains the "date", from 7 days ago.

Next, with "$FilesCollection", we create a collection (or list) of files, in all subfolders in C:\TEMP,

which are older than 7 days. Notice the use of the "Get-Childitem" CmdLet.

We have seen "Get-Childitem" before in example 6. Here too, we wanted a list of files. The name "Get-Childitem"

already says it all. If "C:\TEMP" is the "parent", then every object in it, are it's "children".

You should be able to have "an idea" how "$FilesCollection", after "Get-Childitem" did it's work, is a list of files

which must be processed. Next, a foreach loop will work through the list, and deletes the files.

Note:

A similar cmd shell command is:

C:\> forfiles /P C:\webserver\logs /S /M *.log /D -30 /C "cmd /c del @FILE"

This example will delete all *.log files in the "c:\webserver\logs" directory, which are older than 30 days.

It seems almost easier than using Powershell ! Well, No. You do not want to know what effort MS used to build the "forfiles" command.

With Powershell, your CmdLets and everything else that make up the environment (e.g. loop constructs, functions etc..)

all form endlessly more powerfull building blocks, to build anything you need.

Example 4.2: Viewing and managing a "Win2K8 / Win2K12 Failover Cluster".

Once you have installed a "Failover Cluster" on 2 or more Nodes, on each node, new Failover cluster CmdLets

are available on those systems.

However, it's possible you still need to load the failoverclusters powershell module before you can use those new CmdLets.

PS C:\> import-module failoverclusters

Let's take a look at a few of those new CmdLets. Here are some examples.

The commands shown, are only for illustrational purposes. In your particular situation, you often need to modify

such an example, before it really works (e.g. clustername, resource group name etc...), unless it's a generic command

which does not use a particular cluster object name (for example, create a Cluster log file).

=> 1. Create the Cluster log file:

Examples:

- Create the log into the current directory (.), over the last 400 minutes (that could be a large ascii file).

PS C:\> Get-ClusterLog -Destination . -TimeSpan 400

- Create the log into the current directory, over the last 10 minutes.

PS C:\> Get-ClusterLog -Destination . -TimeSpan 10

=> 2. Show cluster nodes:

PS C:\> Get-ClusterNode

Name..........State

----..........-----

node1.........Up

node2.........Up

If you are not connected to a certain Node of a certain cluster, or you want to see the state of the nodes

of another cluster, then supply the "clustername" to the CmdLet.

PS C:\> Get-ClusterNode -Cluster ClusterSales

=> 3. Show info of a Cluster (or names of all Clusters)

PS C:\> Get-Cluster

PS C:\> Get-Cluster cluster1

PS C:\> Get-Cluster | fl * (shows lots of info)

PS C:\> Get-Cluster -domain antapex.nl

=> 4. Show cluster quorum info:

PS C:\> Get-ClusterQuorum -Cluster SQLCluster1

=> 5. Move (failover) a Group to another node:

PS C:\> Move-ClusterGroup –Name SQLCLUS1 -Node node2

PS C:\> Get-ClusterNode node2 | Get-ClusterGroup | Move-ClusterGroup

=> 6. view resources for a certain Group:

PS C:\> Get-ClusterGroup "GROUPNAME" | Get-ClusterResource

=> 7. Start/Stop a certain Group:

PS C:\> Start-ClusterGroup SQLCLUS1

PS C:\> Stop-ClusterGroup SQLCLUS1

Example 4.3: Put quotes around the records of a (flat) .csv file.

Suppose that from a database, a report is extracted using some export utility. This utility produces a ".csv" file,

which is a "comma seperated" ascii file. Suppose that this export file is called "pre_report.csv" and it is created by

the export utility in the folder "F:\Uploads\".

Now, suppose that the records in "pre_report.csv" have a layout as in the following example:

Business Unit,Revenue,TotalCosts

NL,1000400,200562

US,200726,19925

BE,5999000,2001788

etc..

Generally, there could be hundreds, or thousends (or even millions) of rows in such a file.

Anyway, suppose further that the file is not complete for further processing.

What the requirement is, is that the records shown above, needs to be enclosed (surrounded) by quotes (").

(For the sake of argument, let's suppose that the database extracting utility is not able to do this).

So, we need this:

"Business Unit,Revenue,TotalCosts"

"NL,1000400,200562"

"US,200726,19925"

"BE,5999000,2001788"

etc..

One solution is, that we create a PowerShell script that operates on every record in "pre_report.csv",

put double quotes around every record, and places them in the new "report.csv" .csv file.

Take a look at the following script, which will do exactly that.

Remove-Item F:\Uploads\report.csv

$sym="`""

$list = Get-Content F:\Uploads\pre_report.csv

foreach ($v in $list)

{

Write-Output "$sym$v$sym" >> F:\Uploads\report.csv

}

As you can see, using the "Remove-Item" CmdLet, we start with removing the former "report.csv" file.

Next, we use a variable "$sym" which contains the " symbol. Such a character is a string really, so you would

normally enclose it using double quotes. But just using three double quotes (""") does not work.

Using it this way "`"", the special single quote will sort of "hide", or "escape", the second double quote, so it ends up

as if the second quote is just a normal string (enclosed by double quotes).

Next, we use the "Get-Content" CmdLet, to read in the "pre_report.csv", and put it in the $list variable.

Next, using the "foreach" loop, we process every record from "$list", meaning that we place double quotes

around every record, and store this new collection in the file "report.csv".

Example 4.4: Some AD examples.

When working on a Win2K8 DC, you likely have the "Active Directory" module available, which means that new specialized CmdLets are

at your disposal, from which you can easily add, delete, list accounts, groups, members in groups, computers, list OU's, etc.. etc..

With some preparations done, you can also use it from a Win7 workstation, or some management Server.

Here are a few simple examples.

List all user accounts in AD with their most interesting properties (like CN, enabled/disabled etc..):

get-aduser –filter * | export-csv "listing.csv"

List all user accounts in groups, with group names like FIN* (like Finance or any group else starting with "FIN":

# Users in All FIN* groups in user "Long" names (Distinguished Names)

# First get all groups with names like FIN*

$groups=get-ADGroup -Filter { name -like 'FIN*' } -Searchbase "DC=myorg,DC=org" | ForEach-Object {$_.SamAccountName}

# Find all Members in those FIN* groups:

foreach ($group in $groups){

get-adgroupmember $group | foreach-object { $_.Name} >> FINmembersFullNames.txt

}

# Same script, but now Members of All FIN* groups in in Short names (SAM Names)

# First get all groups with names like FIN*

$groups=get-ADGroup -Filter { name -like 'FIN*' } -Searchbase "DC=myorg,DC=org" | ForEach-Object {$_.SamAccountName}

# Find all Members in those FIN* groups:

foreach ($group in $groups){

get-adgroupmember $group | foreach-object { $_.SamAccountName} >> FINmembersSAMNames.txt

}

Example 4.5: Loop through a text file with accounts and assign file system permissions.

Suppose you have a text file with user accounts and other data. Now, using that file, you need to grant

permissions to some filesystem directory, using "icacls", for the user accounts that are listed in that file.

Just suppose we need to create user home directories, and give every user full control on it's private directory,

as well as making the user the "owner" of it's private directory.

Suppose we have the following file, called "newaccounts.txt":

12335 user1 Amsterdam

23769 user2 New York

67244 user3 Berlin

Then, using

Get-Content newaccounts.txt | %{$data=$_.split(" "); Write-Output "$($data[1])"} > acctmp.txt

produces the file "acctmp.txt", with content like this:

user1

user2

user3

Using "Write-Output "$($data[1])"}", only the second column (column #1 since the array starts with "0") will be printed.

We can make the code better, if we first test if a former "acctmp.txt" already exists, and if so, delete it first.

So, we could start with this block:

if (Test-Path c:\test\acctmp.txt)

{

Remove-Item c:\test\acctmp.txt

}

So, the CmdLet "Test-Path" can be used to test if a directory or file exists. If "true" the code between { } will be executed.

Note that the "test" is actually useless in our case, since later on we create "acctmp.txt" using single redirection ">",

which will always overwrite a former file (if it existed already).

However, in many other scripts the CmdLet "Test-Path" can be very usefull.

Now, our full script could look like this:

if (Test-Path c:\test\acctmp.txt)

{

Remove-Item c:\test\acctmp.txt

}

Get-Content newaccounts.txt | %{$data=$_.split(" "); Write-Output "$($data[1])"} > acctmp.txt

$list = Get-Content c:\test\acctmp.txt

foreach ($login in $list)

{

New-Item \\SERVER\users\$login -itemtype directory

$acl = Get-Acl \\SERVER\users\$login

$permission = "YOURDOMAIN\$login","FullControl","Allow"

$accessRule = New-Object System.Security.AccessControl.FileSystemAccessRule $permission

$acl.SetAccessRule($accessRule)

$acl | Set-Acl \\SERVER\users\$login

icacls \\SERVER\users\$login /setowner "YOURDOMAIN\$login" /T

}

The first few lines we have already discussed.

Next, we start a loop using the records in "$list" which contains all accounts from "acctmp.txt".

Using the CmdLet "New-Item" we create the users private Home directory.

Then, we define a series of variables which essentialy are use to transfer the value "FullControl"

to the second last statement, where "Set-Acl" is "feeded" with "FullControl" which gets applied to

the user's Home dir "\\SERVER\users\$login".

Finally, the last statement uses "icacls" to make the user the owner of it's private Home dir.

Example 4.6: Compare txt files (.txt, .csv etc...)

There are ofcourse many ways to do that. In one approach, we might use the Compare-Object CmdLet.

Here are just a few examples (or no more than a few statements, actually):

PS C:\temp> Compare-Object -ReferenceObject (Get-Content .\ReportJohn.csv) -DifferenceObject (Get-Content .\ReportMary.csv)

other similar example (could be in a script):

$RefFile = Get-Content "C:\Temp\ReportJohn.txt"

$DiffFile = Get-Content "C:\Temp\ReportMary.txt"

Compare-Object $RefFile $DiffFile

So, if you would have "C:\Temp\ReportJohn.txt" as having the content:

a

b

c

d

e

And if you would have "C:\Temp\ReportMary.txt" as having the content:

a

b

c

Then running the command (or script) would show you "d" and "e" as the difference.

Note: the DOS "fc" command in order to compare (ascii or binary) files, is really very easy to use:

C:\TEMP> fc /L ReportJohn.txt ReportMary.txt

Note: Use "fc /?" to get more info on the command switches.

Indeed, in just a few cases DOS commands work even better than the equivalent Powershell.

But I haste me to say that PowerShell is much more "powerful" and has a much wider scope, compared to DOS.

If you look at the last powershell example, and put those 3 lines in a ".ps1" scriptfile, then it works for the

ReportJohn.txt and ReportMary.txt files, But that's quite ugly. It's much better to have a script

that uses parameters to be supplied, so that you can re-use it for other (similar) files as well.

Do you know how to do that? Yes..., if you indeed have read this note, you do.

Example 4.7: Calling a stored procedure, or starting a SQL Agent job, from Powershell. (Works, but a bit outdated).

The following shows a very simple example. You need to know which stored procedure you want to call,

or which SQL Server Agent job you want to start, and ofcourse which database and Instance are involved.

Example:

$instancename="MySQLServer"

$db="MyDataBse"

$sqlConnection = new-object System.Data.SqlClient.SqlConnection

$sqlConnection.ConnectionString = 'server=' + $instancename + ';integrated security=TRUE;database=' + $db

$sqlConnection.Open()

$sqlCommand = new-object System.Data.SqlClient.SqlCommand

$sqlCommand.CommandTimeout = 120

$sqlCommand.Connection = $sqlConnection

$sqlCommand.CommandText= "exec msdb.dbo.sp_start_job 'My SQL Agent job'"

Write-Host "Executing the job..."

$result = $sqlCommand.ExecuteNonQuery()

$sqlConnection.Close()

In the example below we use a call to a stored procedure from Powershell.

Here we call the stored procedure "msdb.dbo.performancevlpi , which performs some stuff,

but also returning a variable which contains the end status of the performed work.

$Logfile = "\\fileshare\dfs\jobs\albert.log"

$instancename="SVRSQL05"

$db="DataMart"

$myoutput=""

Write-Host "Job is running. Please do not close this window"

$sqlConnection = new-object System.Data.SqlClient.SqlConnection

$sqlConnection.ConnectionString = 'server=' + $instancename + ';integrated security=TRUE;database=' + $db

$sqlConnection.Open()

$sqlCommand = new-object System.Data.SqlClient.SqlCommand

$sqlCommand.CommandTimeout = 3600

$SqlCommand.CommandText = "msdb.dbo.performancevlpi"

$SqlCommand.Connection = $SqlConnection

$SqlCommand.CommandType = [System.Data.CommandType]'StoredProcedure';

$outParameter = new-object System.Data.SqlClient.SqlParameter;

$outParameter.ParameterName = "@answer";

$outParameter.Direction = [System.Data.ParameterDirection]'Output';

$outParameter.DbType = [System.Data.DbType]'String';

$outParameter.Size = 2500;

$SqlCommand.Parameters.Add($outParameter) >> $null;

$result = $SqlCommand.ExecuteNonQuery();

$myoutput = $SqlCommand.Parameters["@answer"].Value;

$myoutput;

$SqlConnection.Close();

If ($myoutput -eq 1)

{

Write-Output "The job completed succesfully" | Out-File $logfile

}

else

{

Write-Output "The job failed" | Out-File $logfile

}

Example 4.8: Finding the most recent file in some folder.

Example 1:

$dir = "c:\test"

$latest = Get-ChildItem -Path $dir | Sort-Object LastAccessTime -Descending | Select-Object -First 1

$latest.name

Example 2:

$f = Dir | Sort CreationTime -Descending | Select Name -First 1

$f.name

Example In DOS (cmd):

Suppose we are using d:\test:

FOR /F "delims=|" %%I IN ('DIR "d:\test\*.*" /B /O:D') DO SET NewestFile=%%I

echo %NewestFile%

4.9: Rename file(s).

Example 1:

$datetime = get-date -f MMddyy-hhmmtt

rename-item d:\test\daily.bak -newname ($datetime + ".bak")

Example 2:

Get-ChildItem -Filter “*.bak” -Recurse | Rename-Item -NewName {$_.name -replace '.bak','.archive' }

Get-ChildItem *.dmp | Rename-Item -NewName { $_.Name -replace '.dmp','.bak' }

Example In DOS (cmd):

ren *.doc *.archive

ren a.txt b.txt

ren f:\data\sales.xls sales_2015.xls (or better: copy f:\data\sales.xls g:\backups\sales_2015.xls)

4.10: "Tail" or Show, the end of large txt files (like large logfiles)..

In unix/linux, it's easy to see the last lines of some txt file (like a logfile). Simply use the tail command, like:

$ tail -50 alert.log

which shows you the last 50 lines of that logfile. You can use any number, like 50, 120, 200 etc..

In Powershell we have a similar commandlet. Use "Get-Content" with the "-tail" option.

Example:

PS C:\LOGS> get-content -tail 100 '.\web.log'

Here, the ".\" means that the CommandLet must use the current directory.

Chapter 5. Powershell and SQL Server.

5.1 Powershell versions

It seems that every new Windows version, comes with a new Powershell version. Also, in between, sometimes "interfaces"

to applications, and the OS, may be "upgraded" too. Backward compatibility of new versions to older scripts is very high,

although in some cases you must indeed choose to use a newer interface.

For about Windows versions, the following crude overview holds:

Version............Most prominent OS, in which it was first implemented

PowerShell 1.0.... Windows Server 2008, and Later XP versions (XP2)

PowerShell 2.0.... Windows Server 2008 and R2, Win7

PowerShell 3.0.... Windows Server 2012, Win8, Win7 SP1

PowerShell 4.0.... Windows Server 2012 R2, Win7, Win8.1

PowerShell 5.0.... Windows Server 2016, Win10

PowerShell 5.1.... Windows Server 2016, Win10 latest build

PowerShell 6.0.... comes available Win/Linux

If you look at figure 1 above, you see that Powershell itself runs on .Net, and has

a strong dependency on "WMI", that is Windows Management Instrumentation.

Through WMI, Powershell accesses objects of the OS and registered devices.

For example, although PowerShell 5 and WMI 5 are not equivalent, it is true that a more modern

Powershell, like Powershell v5, needs a certain revision of WMI too. It means also that you can use for example,

Powershell v5 on Win7, but then you need updates on WMI and .Net too.

You can check your version using:

PS C:\TEMP> $PSVersionTable.PSVersion

returns compact information on builds

PS C:\TEMP> get-host

returns information general version and language (culture).

If the variable $PSVersionTable.PSVersion is not available, you have v1/v2, and you may check that with "get-version".

As of SQL Server 2008, "direct" interfacing from Powershell to SQL Server, was possible, using

the sqlps.exe "wrapper", which starts Powershell, with the PowerShell snap-ins to get loaded and registered.

From that point, you could for example use the "Invoke-Sqlcmd" CmdLet.

At later SQL Server versions, as of 2012, the "sqlps" module could be loaded, enabling a larger set

of CmdLet's. As of SQL 2016, this module was enhanced and renamed to "SqlServer".

5.2 Powershell interfaces to SQL Server.

ADO.NET:

Since .Net became popular, ADO.NET is a "basic database API" to access a variety of RDBM'ses, like Oracle and SQL.

The namespace (provider) "System.Data.SqlClient", is well known by .NET developers.

So, for the older SQL Server 2008 version, for example, it was quite common to develop web apps, or C#, or VB .NET apps,

using ADO.NET to access databases.

ADO.NET can be used by various development platforms, and by Powershell too, like for example in the basic code below:

$scon = New-Object System.Data.SqlClient.SqlConnection

$scon.ConnectionString = "Data Source=SQL01\SQLINST1;Initial Catalog=sales;Connection Timeout=0;Integrated Security=true"

$cmd = New-Object System.Data.SqlClient.SqlCommand

$cmd.Connection = $scon

$cmd.CommandText = "SELECT * FROM customers"

$cmd.CommandTimeout = 0

Basically, example 4.7 above, uses ADO.NET too.

The method can be used by modern apps and modern SQL versions too. But Powershell has evolved a bit,

so other methods for access to databases, using rather simple CmdLet's, is possible too.

The older SQLPS and newer SqlServer modules:

With later SQL Server versions, using the graphical Management Studio, you can activate Powershell,

or actually "sqlps".

This module makes it possible to manage SQL Server objects from Powershell, and "traverse" the SQL Server hierarchy

of objects, just like a "filesystem" (using cd, ls etc..).

From the powershell "shell" itself, you can load the module too. Then use:

PS C:\TEMP> Import-Module -Name sqlps -DisableNameChecking

Apart from the feature that you can "walk" or "traverse" the SQL Server hierarchy, it also

makes a range of SQLserver CmdLet's available, like "Backup-SqlDatabase", "Restore-SqlDatabase" and many more.

SQLPS uses the "SQL Server Management Object" interface, or "SMO".

There are actually two SQL Server PowerShell modules, namely "SqlServer" (newer) and "SQLPS" (older).

The SQLPS module is/was included with the SQL Server installation, but is nowadays regarded

as "the former module". The most up-to-date PowerShell module today is the "SqlServer" module.

However, for the "SqlServer" installable module, Powershell v5 seems to be a requirement.

With SQL 2016, the "SqlServer" module seems to be automatically installed with the seperate SSMS (Management Studio) installation.

In most cases, it seems to reside in "%Program Files\WindowsPowerShell\Modules\SqlServer".

In that case simply give the command:

PS C:\TEMP> Import-Module -Name SqlServer

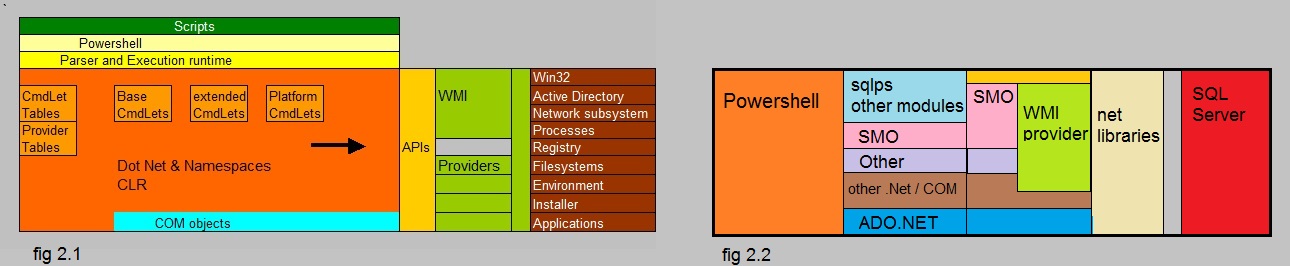

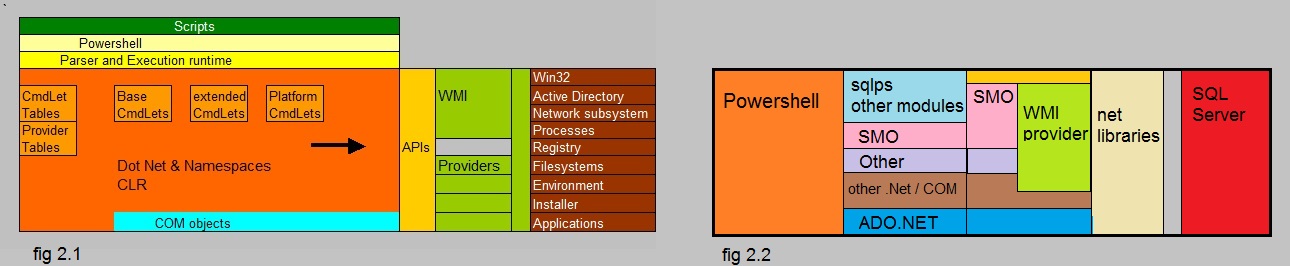

5.3 Powershell Architecture with API's .

Below you see a Jip-Janneke figure, trying to illustrate how powershell might access

other parts of the system (like the OS, Applications, SQL Server etc..).

The first sub-figure, is the same as figure 1, above. The second sub-figure, tries to show

the different API's to connect to SQL Server.

Fig 2. Simplified architecture Powershell.

Both figures are simply a very high-level overview. For example, the details of "WMI" are not shown.

5.4 SQL CmdLet's .

If you have imported "sqlps" or "SqlServer" modules, as shown above, a range of SQL Server specific

CmdLet's are available.

Example:

PS SQLSERVER:\SQL\Computer\Instance> Backup-SqlDatabase -Database "SALES"

To see all installed CmdLet's, try:

PS SQLSERVER:\SQL\Computer\Instance> get-command -module SQLSERVER

An overview of all CmdLet's from the imported "SqlServer" module, can be found in this Microsoft page:

SQL Server PowerShell cmdlets

That's it. Hope you liked it !